The five modes of chaos engineering experimentation

Unleashing the Full Potential of Chaos Engineering: Part 1

Welcome to this two-part blog post series on chaos engineering best practices! In this series, I’ll be sharing insights and practical strategies that I’ve gathered throughout a decade of chaos engineering.

In Part 1, we’ll dive into the different modes of experimentation for chaos engineering. From the exploratory ad-hoc mode to challenging continuous experimentation in production, I’ll walk you through each mode and highlight its unique benefits. Part 2 focuses on the best practices that I’ve found essential for achieving success with chaos engineering.

Before we jump into Part 1, I want to take a moment to extend my sincere gratitude to everyone who was involved in reviewing and improving this blog post, in particular Gorik, Rudolf, Seth, Elaine, Varun, Olga, Klara, Jason, Yilong, Alan, Laurent, and Shllomi. Your input, feedback, and suggestions have been instrumental in shaping the final version. I am truly grateful for your time, effort, and commitment to making this content the best it can be. It’s been a collaborative journey, and I couldn’t have done it without your support. Thank you all for your contributions.

Now, let’s dive in by first briefly discussing the concept of chaos engineering and its significance.

Chaos Engineering — what is it and why is it important?

Chaos engineering is a systematic process that involves deliberately subjecting an application to disruptive events in a risk-mitigated way, closely monitoring its response, and implementing necessary improvements. Its purpose is to validate or challenge assumptions about the application’s ability to handle such disruptions. Instead of leaving these events to chance, chaos engineering lets engineers orchestrate experiments in a controlled environment, typically during periods of low traffic and with engineering support readily available for effective mitigation.

The foundation of chaos engineering lies in following a well-defined, scientific approach. It begins with understanding the normal operating conditions, known as the steady state, of the system under consideration. From there, a hypothesis is formulated, and an experiment is designed and executed, often involving the deliberate injection of faults or disruptions. The results of the experiment are then carefully verified and analyzed, producing learnings that can be used to enhance the system’s resilience.
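The steady-state, hypothesis, experiment, and verification steps can be sketched as a small Python harness. This is a minimal illustration under stated assumptions, not the API of any particular chaos engineering tool: a single numeric metric stands in for the steady state, the hypothesis is that this metric stays within a relative tolerance while the fault is active, and `probe`, `inject_fault`, and `rollback` are hypothetical hooks you would connect to your own monitoring and fault-injection tooling.

```python
import statistics
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hypothesis:
    """Predicts that a steady-state metric stays within a tolerance during a fault."""
    description: str
    max_relative_deviation: float  # 0.2 means "within 20% of the steady state"


def measure(probe: Callable[[], float], samples: int) -> float:
    """Average several probe readings to smooth out noise."""
    return statistics.mean(probe() for _ in range(samples))


def run_experiment(
    probe: Callable[[], float],
    inject_fault: Callable[[], None],
    rollback: Callable[[], None],
    hypothesis: Hypothesis,
    samples: int = 5,
) -> bool:
    """Return True if the hypothesis held, False if the experiment refuted it."""
    # 1. Understand the steady state before changing anything.
    baseline = measure(probe, samples)
    if baseline == 0:
        raise ValueError("steady-state metric must be non-zero for a relative check")
    # 2. Inject the fault; the finally block rolls it back even if measuring fails.
    inject_fault()
    try:
        under_fault = measure(probe, samples)
    finally:
        rollback()
    # 3. Verify the result against the hypothesis.
    deviation = abs(under_fault - baseline) / baseline
    return deviation <= hypothesis.max_relative_deviation


# Usage with a simulated service whose fault doubles latency.
state = {"latency_ms": 100.0}
result = run_experiment(
    probe=lambda: state["latency_ms"],
    inject_fault=lambda: state.update(latency_ms=200.0),
    rollback=lambda: state.update(latency_ms=100.0),
    hypothesis=Hypothesis("Latency stays within 20% of steady state", 0.2),
)
print(result)  # False: latency doubled, so the hypothesis is refuted
```

The rollback sits in a `finally` block, so the fault is removed even if measuring fails; this is one simple way to keep an experiment risk-mitigated.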

While chaos engineering primarily aims to improve the resilience of an application, its benefits extend beyond that. It is a valuable tool for improving other facets of the application, including its performance, and for uncovering latent issues that might otherwise have remained hidden. Chaos engineering also reveals deficiencies in monitoring, observability, and alarms, allowing them to be refined, and it contributes to reducing recovery time. It accelerates the adoption of best practices and cultivates a mindset of continuous improvement. Ultimately, it enables teams to build and hone their operational skills through regular practice and repetition.

Here is how I usually like to put it:

Chaos engineering is a compression algorithm for experience.

In chaos engineering, it is common and generally recommended to focus on parts of the system that you believe are resilient, and to base each experiment on a hypothesis that predicts some form of resilient behavior. This hypothesis serves as a guiding principle for the experiment, providing a clear objective and expected outcome. Resilient behavior, in this context, encompasses not only the ability to prevent failures but also the capacity to react and recover effectively when failures do occur. By formulating a hypothesis that predicts resilient behavior, teams can proactively assess the robustness of their systems, validate their assumptions, gain deeper insight into the system’s behavior, and identify areas for improvement. This requires fulfilling certain prerequisites: in particular, conduct a thorough review of your system or application and make sure you have identified all the application components, their dependencies, and the recovery procedures associated with each component. I highly recommend reviewing the AWS Well-Architected Framework, which provides valuable guidance for building secure, high-performing, resilient, and efficient infrastructure for your applications and workloads.
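As a minimal sketch of that review, assuming you keep a simple inventory in code, the snippet below flags components without a recovery procedure and dependencies that were never inventoried. The `Component` structure and the runbook paths are hypothetical; in practice this information might live in a service catalog or in architecture documentation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Component:
    name: str
    dependencies: List[str] = field(default_factory=list)
    recovery_procedure: str = ""  # e.g. a runbook path; empty means "not identified yet"


def find_gaps(components: List[Component]) -> List[str]:
    """List the gaps to close before designing experiments against this inventory."""
    known = {c.name for c in components}
    gaps: List[str] = []
    for c in components:
        if not c.recovery_procedure:
            gaps.append(f"{c.name}: no recovery procedure")
        for dep in c.dependencies:
            if dep not in known:
                gaps.append(f"{c.name}: unknown dependency '{dep}'")
    return gaps


inventory = [
    Component("api", ["db", "cache"], "runbooks/api-restart.md"),
    Component("db", [], "runbooks/db-failover.md"),
]
for gap in find_gaps(inventory):
    print(gap)
# api: unknown dependency 'cache'
```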

Additionally, hypothesis-driven (or failure-driven) development can effectively safeguard deployments against operational changes. For instance, chaos experiments can be used to ensure that services have properly configured rollback monitors to handle potential deployment failures. In such cases, validating alarms through chaos experiments involves formulating hypotheses around deliberately triggered failures: for example, stressing the CPU and verifying that an alarm set to activate at a specific CPU threshold actually fires. This method helps identify weaknesses and makes the case for prioritizing resilience improvements over new functional requirements, which matters given the challenges application teams face in securing budget for resilience and prioritizing it.
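The CPU alarm example can be turned into a checkable hypothesis. The sketch below assumes a simplified alarm that fires after `periods` consecutive samples at or above a threshold (real monitoring systems have richer evaluation rules), and treats the CPU samples as having been collected while a CPU-stress fault was injected. All names and values are illustrative.

```python
from typing import List, Optional


def first_alarm_index(cpu: List[float], threshold: float, periods: int) -> Optional[int]:
    """Index at which the alarm fires: `periods` consecutive samples at or above
    `threshold`. Returns None if the alarm never fires."""
    consecutive = 0
    for i, value in enumerate(cpu):
        consecutive = consecutive + 1 if value >= threshold else 0
        if consecutive >= periods:
            return i
    return None


def alarm_hypothesis_holds(cpu: List[float], injection_index: int, threshold: float,
                           periods: int, max_delay: int) -> bool:
    """Hypothesis: the alarm fires after the fault is injected, within `max_delay` samples.

    Firing before the injection (a false positive) or never firing both refute it.
    """
    fired_at = first_alarm_index(cpu, threshold, periods)
    return fired_at is not None and injection_index <= fired_at <= injection_index + max_delay


# CPU utilization (%) per sample; CPU stress is injected at index 3.
cpu = [20.0, 25.0, 22.0, 95.0, 97.0, 96.0, 98.0]
print(alarm_hypothesis_holds(cpu, injection_index=3, threshold=80.0, periods=3, max_delay=3))  # True
# A misconfigured threshold means the alarm never fires during the fault.
print(alarm_hypothesis_holds(cpu, injection_index=3, threshold=99.0, periods=3, max_delay=3))  # False
```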

Finally, it is important to recognize that conducting chaos experiments in production offers significant benefits, because production contains crucial elements that non-production environments lack, such as real production traffic. However, it is advisable to begin in non-production environments and gradually progress through your other environments until you eventually reach production. This approach lets you build confidence and maturity along the way.

In Part 2 of this blog post series, I will delve deeper into the topic of chaos engineering in production and explore the specific benefits it offers over non-production environments. We will discuss the challenges of replicating production complexities outside of the actual production environment and the limitations of non-production setups. I will provide insights and strategies on how to effectively and safely conduct chaos experiments using real production traffic. Stay tuned for Part 2!

The five modes of experimentation

To build confidence and maturity gradually and systematically, you need to understand the different ways to run chaos engineering experiments.

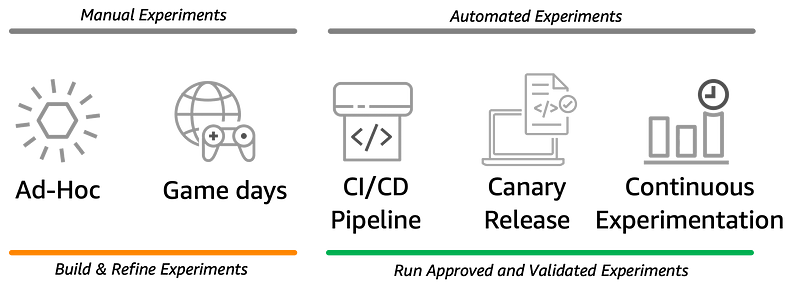

There are five main modes of experimentation: ad-hoc, GameDays, Continuous Integration and Continuous Delivery (CI/CD) pipeline, canary release, and continuous experimentation. Each mode contributes to system resilience. Their order may vary, but this is typically the “natural” progression.

Both the ad-hoc and GameDay modes can be considered more “manual” approaches compared to the other modes. These modes involve a more hands-on and exploratory process, where engineers actively shape and refine hypotheses through their observations and experiments.

On the other hand, the CI/CD pipeline, canary release, and continuous experimentation modes are more automated in nature. These modes focus on running approved and validated hypotheses in a controlled and iterative manner. They leverage automation and integration into the development and deployment processes to ensure consistent and repeatable experiments.

Both sets of modes serve distinct purposes in the chaos engineering journey. The ad-hoc and GameDay modes are valuable for exploring, refining, and shaping hypotheses, while the other three modes provide a framework for running experiments based on these established hypotheses in a more automated and systematic fashion.

While there are general best practices for chaos engineering, each mode is unique, so you will need to adapt the best practices and guidelines accordingly. Engineers should be familiar with all of these modes to maximize the effectiveness and accuracy of their efforts.

Let’s go through them.

Ad-hoc mode

The ad-hoc mode is a straightforward approach aimed at manually uncovering potential defects and errors. It involves informal, unstructured experimentation, where we rely on experienced engineers to “guess” the most likely sources of errors. This mode is useful when time is limited or when exploring a particular technology. However, for greater effectiveness, it’s crucial to gradually formalize and automate these experiments using small scripts or snippets of code. Automation brings consistency and repeatability to the experimentation process, enabling us to continuously identify and address potential defects.
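As an example of formalizing an ad-hoc experiment into a small, repeatable script, the Python decorator below wraps a function call with injected latency and randomly raised errors. `inject_faults`, `count_failures`, and `fetch_price` are hypothetical names for illustration, and a wrapper like this is meant for non-production environments.

```python
import functools
import random
import time
from typing import Callable, Optional


def inject_faults(error_rate: float = 0.0, delay_s: float = 0.0,
                  rng: Optional[random.Random] = None) -> Callable:
    """Decorator that adds fixed latency and randomly raises errors.

    Passing a seeded `rng` makes an ad-hoc experiment repeatable run after run.
    """
    rng = rng or random.Random()

    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if delay_s > 0:
                time.sleep(delay_s)  # simulated slow dependency
            if rng.random() < error_rate:
                raise ConnectionError(f"injected fault in {func.__name__}")
            return func(*args, **kwargs)
        return wrapper
    return decorator


def count_failures(fn: Callable[[], object], calls: int) -> int:
    """Call `fn` repeatedly and count injected failures."""
    failures = 0
    for _ in range(calls):
        try:
            fn()
        except ConnectionError:
            failures += 1
    return failures


@inject_faults(error_rate=0.3, rng=random.Random(42))
def fetch_price() -> float:
    return 9.99  # stand-in for a real downstream call


print(count_failures(fetch_price, 100))  # number of injected failures, roughly 30
```

The seeded random generator is the design choice that matters here: rerunning the script with the same seed injects the same sequence of faults, which turns a one-off guess into a repeatable experiment.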

It is important to note that ad-hoc experimentation in production systems should be very limited because of the risk of impacting customer traffic and causing outages. Instead, use ad-hoc experimentation in non-production environments. There, it serves as a valuable mode for exploration, allowing you to try out different approaches and scenarios and refine your intuition in order to form or improve a hypothesis.

GameDay mode

Then we have GameDays, which serve as simulated scenarios for teams to practice and evaluate their incident response capabilities. GameDays not only test and enhance technical capabilities but also validate processes and roles. Think of them as fire drills, but for software systems. GameDays allow teams to build the necessary skills and knowledge to respond effectively to disruptive events. By simulating potentially dangerous scenarios, we can choose when and how to engage with them, allowing us to refine our response procedures. GameDays cover critical areas such as resilience, availability, performance, operation, and security.

It’s essential to involve all personnel who are responsible for operating the workload, including individuals from operations, testing, development, security, and business operations. Integrating monitoring, alerting, and engagement into GameDays fosters a culture of continuous learning and improvement. The ultimate goal is to conduct GameDays in a production environment, further strengthening the resilience of the system. Finally, GameDays provide a unique opportunity to “graduate” specific chaos experiments and automate them.
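One way to make a GameDay's evaluation of incident response concrete is to record key timestamps during the exercise and derive durations from them. The event names below are hypothetical, and the timeline is illustrative data, not results from a real GameDay.

```python
from datetime import datetime, timedelta
from typing import Dict


def gameday_timings(events: Dict[str, datetime]) -> Dict[str, timedelta]:
    """Derive response durations from timestamps recorded during a GameDay."""
    start = events["fault_injected"]
    return {
        "time_to_detect": events["alarm_fired"] - start,
        "time_to_engage": events["responder_engaged"] - events["alarm_fired"],
        "time_to_recover": events["service_recovered"] - start,
    }


timeline = {  # illustrative timestamps
    "fault_injected": datetime(2024, 1, 1, 10, 0),
    "alarm_fired": datetime(2024, 1, 1, 10, 4),
    "responder_engaged": datetime(2024, 1, 1, 10, 9),
    "service_recovered": datetime(2024, 1, 1, 10, 31),
}
for name, duration in gameday_timings(timeline).items():
    print(f"{name}: {duration}")
# time_to_detect: 0:04:00
# time_to_engage: 0:05:00
# time_to_recover: 0:31:00
```

Comparing these durations across successive GameDays is one way to check whether response procedures are actually improving.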

CI/CD pipeline mode

Next, we have testing in the CI/CD pipeline, an already advanced mode of resilience experimentation. This mode involves automating experiments within the continuous integration and continuous delivery pipeline. By doing so, we can detect and fix issues early on, before they reach the production environment. The key principle here is to “fail fast” by catching and addressing bugs as early as possible, reducing costs and preventing issues from spreading further. Integrating chaos experiments into the continuous delivery workflow allows for a proactive and iterative approach to resilience experimentation.

This approach is especially valuable in the dynamic and fast-paced world of continuous delivery, where frequent changes and updates to the application are the norm. It ensures that resilience is not an afterthought but an integral part of the development process, ingrained in every step of the application’s evolution.

However, a chaos experiment CI/CD pipeline is distinct from a rapid deployment pipeline due to its nature and purpose. Unlike a rapid deployment pipeline, a chaos pipeline is triggered less frequently and involves more than just another integration test. It typically requires additional time and considerations due to the complexity of injecting faults and evaluating the system’s resilience. As a result, the chaos pipeline may have longer execution times to allow for comprehensive observation, analysis, and validation of the system’s response to injected faults. This deliberate approach ensures that the resilience of the system is thoroughly validated and optimized, mitigating potential risks before deploying to production environments.
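A chaos stage in a CI/CD pipeline ultimately has to turn an experiment into a pass or fail signal. The Python sketch below assumes hypothetical `inject_fault`, `rollback`, and `error_rate_probe` hooks, keeps the fault active for a configurable observation window (the longer execution time discussed above), and returns an exit code that a pipeline step could use to stop the release. The threshold and timing values are placeholders.

```python
import time
from typing import Callable, List


def chaos_gate(
    inject_fault: Callable[[], None],
    rollback: Callable[[], None],
    error_rate_probe: Callable[[], float],
    max_error_rate: float,
    observation_s: float,
    interval_s: float,
) -> int:
    """Run one fault for an observation window and return a process exit code
    (0 = resilient enough to continue, 1 = fail the pipeline stage)."""
    samples: List[float] = []
    inject_fault()
    try:
        deadline = time.monotonic() + observation_s
        while True:
            samples.append(error_rate_probe())  # always take at least one sample
            if time.monotonic() >= deadline:
                break
            time.sleep(interval_s)
    finally:
        rollback()  # never leave the fault in place, even if the probe raises
    worst = max(samples)
    print(f"{len(samples)} samples, worst error rate {worst:.3f}, limit {max_error_rate:.3f}")
    return 0 if worst <= max_error_rate else 1


# In a pipeline step you would wire in real hooks and end with:
#     sys.exit(chaos_gate(inject, rollback, probe, max_error_rate=0.01,
#                         observation_s=600, interval_s=15))
```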

It is also important to note that not all chaos experiments are suitable for inclusion in the CI/CD pipeline, due to factors such as complexity or potential risk. Some experiments involve intricate setups or complex interactions between system components, which make them challenging to automate and incorporate into a pipeline.

Therefore, it is crucial to assess the potential complexity and weigh it against the benefits before including a chaos experiment in the CI/CD pipeline.

Canary release mode

Then we have canary release, a strategy used in release pipelines to minimize the risks associated with introducing new features or changes. Canary release involves gradually routing a small percentage of users to a different version of the application, known as the canary version. It is often referred to as a phased or incremental rollout. This approach allows engineers to evaluate the performance of an experiment in a controlled manner. By limiting the impact to a subset of users, canary release reduces the potential blast radius of failures.

One of the significant benefits of canary release is the ability to quickly roll back to a previous version if issues arise. This mode provides engineers with a fast and relatively safe way to test new features using real production data. However, canary release presents challenges in terms of routing traffic to multiple versions of the application. Various mechanisms such as internal team vs. customer routing, geographic-based routing, feature flags/toggles, and random assignment are commonly used to address this challenge.
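The sketch below combines two of the routing mechanisms mentioned above: internal users are always routed to the canary, and other users are assigned by hashing their ID into 10,000 buckets. Hashing is used here as a deterministic variant of random assignment, so each user consistently sees the same version. The function name and parameters are hypothetical.

```python
import hashlib
from typing import Set


def route_to_canary(user_id: str, canary_percent: float, internal_users: Set[str]) -> bool:
    """Return True if this user's requests should go to the canary version.

    Internal users always get the canary first. Everyone else is assigned by a
    stable hash of their ID, so a user stays on the same version across requests.
    """
    if user_id in internal_users:
        return True
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return bucket < canary_percent * 100  # e.g. 5.0 (%) -> buckets 0..499


# Phased rollout: widen the canary from 1% to 5% to 25% of non-internal users.
for percent in (1.0, 5.0, 25.0):
    in_canary = sum(route_to_canary(f"user-{i}", percent, {"ops-team"}) for i in range(10_000))
    print(f"{percent:>5.1f}% target -> {in_canary} of 10000 users on the canary")
```

Because each user's bucket never changes, users in the canary at 1% stay in it at 5% and 25%, so widening the rollout never moves anyone back and forth. Setting `canary_percent` to 0 routes all non-internal users back to the stable version, which is one simple form of quick rollback.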

By carefully navigating these challenges, we can ensure smooth and seamless feature roll-outs while maintaining the resilience of the system. To learn more about this mode and how to use it on AWS in particular, check out this post.

Continuous experimentation mode

Lastly, we have continuous experimentation. Whether conducted in a non-production environment or in production, it involves running experiments on the entire system and all of its users. When performing continuous experimentation in a non-production environment, it is vital to ensure that the setup closely mirrors the production environment in terms of traffic patterns and load levels. Techniques such as traffic replay reproduce realistic traffic patterns and loads, providing valuable insight into the system’s resilience without compromising critical production systems.
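Traffic replay comes down to re-sending recorded requests while preserving their timing. The sketch below only computes the replay schedule; the actual sending, and pointing it at a non-production endpoint, is left out. `RecordedRequest` is a hypothetical record format, and the `speedup` factor is an assumption that lets you compress time to raise the load above what was recorded.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RecordedRequest:
    timestamp_s: float  # when the request originally arrived
    method: str
    path: str


def replay_schedule(recorded: List[RecordedRequest],
                    speedup: float = 1.0) -> List[Tuple[float, RecordedRequest]]:
    """Return (send_offset_s, request) pairs that preserve the recorded
    inter-arrival pattern. speedup > 1 compresses time, raising the load."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    if not recorded:
        return []
    ordered = sorted(recorded, key=lambda r: r.timestamp_s)
    start = ordered[0].timestamp_s
    return [((r.timestamp_s - start) / speedup, r) for r in ordered]


log = [
    RecordedRequest(102.0, "POST", "/checkout"),
    RecordedRequest(100.0, "GET", "/catalog"),
    RecordedRequest(100.5, "GET", "/cart"),
]
for offset, req in replay_schedule(log, speedup=2.0):
    print(f"t+{offset:.2f}s {req.method} {req.path}")
# t+0.00s GET /catalog
# t+0.25s GET /cart
# t+1.00s POST /checkout
```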

To effectively support continuous experimentation in a non-production environment, it is crucial to establish robust monitoring, alerting, and incident response protocols. These enable close monitoring of the system’s behavior, prompt detection of anomalies or weaknesses, and efficient incident response. Treat the non-production environment with the same diligence and care as production, so that what you observe there reflects how production would behave.

In production, continuous experimentation involves subjecting the system to turbulent conditions, much like Netflix’s Chaos Monkey, which randomly terminates instances. This deliberate and ongoing introduction of “chaos” continuously challenges and validates the system’s ability to withstand disruptions.

Exceptional monitoring, alerting, and incident response protocols are necessary to support continuous experimentation in production. However, it is crucial to note that continuous experimentation in production should automatically cease in the event of a wider system outage to prevent further complications. Striking a balance between resilience and responsible experimentation practices is essential for maintaining customer trust.
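The sketch below shows one round of continuous experimentation with the automatic stop described above. It is loosely inspired by the idea behind Chaos Monkey (randomly terminating instances) but is not Chaos Monkey's implementation. `system_healthy` and `terminate` are hypothetical hooks into your monitoring and infrastructure, and the rule of never terminating the last instance is an added assumption for safety.

```python
import random
from typing import Callable, List, Optional


def chaos_tick(instances: List[str],
               system_healthy: Callable[[], bool],
               terminate: Callable[[str], None],
               rng: random.Random) -> Optional[str]:
    """One round of continuous experimentation.

    Returns the terminated instance ID, or None if the round was skipped.
    """
    # Kill switch: stop adding chaos when the wider system is already unhealthy.
    if not system_healthy():
        return None
    # Assumed safety guard: always leave at least one instance serving traffic.
    if len(instances) < 2:
        return None
    victim = rng.choice(instances)
    terminate(victim)
    return victim


terminated: List[str] = []
fleet = ["i-a", "i-b", "i-c"]
rng = random.Random(1)
print(chaos_tick(fleet, lambda: True, terminated.append, rng))   # one of i-a, i-b, i-c
print(chaos_tick(fleet, lambda: False, terminated.append, rng))  # None: outage, so it halts
```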

For production, it’s also important to consider your Service Level Objective (SLO) and error budget to maintain availability. I’ll explain more about this in Part 2.
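The error budget arithmetic itself is simple: an availability SLO over a period allows (1 − SLO) of that period as downtime, so 99.9% over 30 days leaves 43.2 minutes. The Python sketch below computes the budget and applies an assumed gating rule, running an experiment only if its estimated worst-case impact fits in what remains. The function names and the gating rule are illustrative assumptions.

```python
def error_budget_minutes(slo: float, period_days: int = 30) -> float:
    """Downtime an availability SLO allows over a period: (1 - SLO) x period length."""
    return (1.0 - slo) * period_days * 24 * 60


def can_run_experiment(slo: float, downtime_so_far_min: float,
                       worst_case_impact_min: float, period_days: int = 30) -> bool:
    """Assumed gating rule: only run if the worst-case impact fits the remaining budget."""
    remaining = error_budget_minutes(slo, period_days) - downtime_so_far_min
    return worst_case_impact_min <= remaining


print(f"{error_budget_minutes(0.999):.1f} minutes")  # 43.2 minutes for 99.9% over 30 days
print(can_run_experiment(0.999, downtime_so_far_min=10.0, worst_case_impact_min=5.0))  # True
print(can_run_experiment(0.999, downtime_so_far_min=40.0, worst_case_impact_min=5.0))  # False
```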

The modes of experimentation in relation to the complexity of experiments

It is important to recognize that chaos experiments vary in complexity and in blast radius, which determines the potential impact they can have on the system. For instance, terminating an instance in production differs significantly from terminating a database in production, both in complexity and in potential consequences. The modes of resilience experimentation are therefore interrelated with the complexity of the experiments being performed. In other words, not all chaos experiments follow the same path through these modes.

When designing and conducting chaos experiments, engineers must consider the specific goals, risks, and potential impacts associated with each experiment. Simpler experiments with a narrower blast radius may be suitable for initial testing and validation, allowing engineers to gain confidence in the system’s resilience without introducing significant disruptions. On the other hand, more complex experiments with a broader blast radius should be approached cautiously, as they have the potential to cause substantial system-wide effects.

By recognizing the relationship between the modes of resilience experimentation and the complexity of the experiments, engineers can make informed decisions about which experiments to include in their resilience strategies. This understanding ensures that the appropriate experiments are selected based on the system’s characteristics, the desired objectives, and the tolerance for potential disruptions.
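One hypothetical way to encode this relationship is to assign each experiment a blast radius and cap how far along the modes it may progress. The categories and the mapping below are assumptions for illustration, echoing the instance-versus-database example above; you would tune them to your own system and risk tolerance.

```python
from enum import IntEnum
from typing import List


class Mode(IntEnum):
    AD_HOC = 1
    GAMEDAY = 2
    CI_CD_PIPELINE = 3
    CANARY_RELEASE = 4
    CONTINUOUS = 5


class BlastRadius(IntEnum):
    SINGLE_INSTANCE = 1    # e.g. terminating one instance behind a load balancer
    SINGLE_SERVICE = 2
    SHARED_DEPENDENCY = 3  # e.g. terminating a database
    SYSTEM_WIDE = 4


# Hypothetical policy: the wider the blast radius, the earlier in the
# progression an experiment stops.
MAX_MODE = {
    BlastRadius.SINGLE_INSTANCE: Mode.CONTINUOUS,
    BlastRadius.SINGLE_SERVICE: Mode.CANARY_RELEASE,
    BlastRadius.SHARED_DEPENDENCY: Mode.GAMEDAY,
    BlastRadius.SYSTEM_WIDE: Mode.GAMEDAY,
}


def allowed_modes(radius: BlastRadius) -> List[Mode]:
    """Modes an experiment with this blast radius may run in, in progression order."""
    return [m for m in Mode if m <= MAX_MODE[radius]]


print([m.name for m in allowed_modes(BlastRadius.SHARED_DEPENDENCY)])  # ['AD_HOC', 'GAMEDAY']
```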

Striking the right balance between simplicity and complexity in chaos experiments is essential as it allows engineers to gradually progress from simpler, controlled experiments to more intricate scenarios, ensuring a comprehensive assessment of the system’s resilience while minimizing the potential for unintended consequences.

That concludes Part 1, folks. In the next post, we will delve into the essential best practices for a successful chaos engineering journey. In the meantime, please feel free to provide feedback, share your thoughts, or express your appreciation. Thank you!

Adrian
