Perform sentiment analysis with Amazon Comprehend triggered by AWS Lambda

Build an event-driven architecture to connect a DynamoDB stream with Amazon Comprehend for sentiment analysis

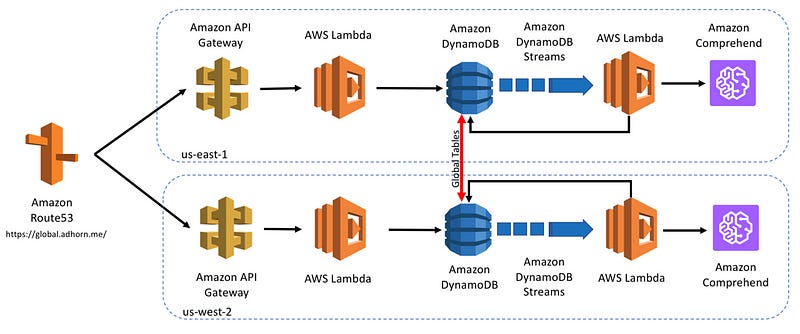

As part of my series on serverless technologies, I’ve been sharing how to build a well-architected backend with AWS services — using multi-region and active-active approaches for high availability.

In this post, we’ll use DynamoDB streams and Amazon Comprehend to apply sentiment analysis to the items stored in the DynamoDB Global Table that we designed and built in the prior posts.

A quick update to the API

To get started, we’ll need to make a quick update to the Lambda function that handles the POST request.

The only difference from the function in the previous post is the additional session_comment attribute, a string containing the user's comment.
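A minimal sketch of what such a handler might look like, assuming an API Gateway proxy event with a JSON body. The `build_item` and `post_handler` names, the generated `item_id` fallback and the table name are hypothetical and only illustrate where session_comment fits in:

```python
import json
import uuid


def build_item(body):
    """Build the DynamoDB item for a POST request (hypothetical helper)."""
    item = {
        # item_id is the key used by the GET function; falling back to a
        # generated uuid4 is an assumption of this sketch.
        "item_id": body.get("item_id") or str(uuid.uuid4()),
    }
    # The new, optional attribute: the user's free-text comment.
    comment = body.get("session_comment")
    if comment:
        item["session_comment"] = str(comment)
    return item


def post_handler(event, context, table=None):
    """Lambda entry point for POST, assuming an API Gateway proxy event."""
    if table is None:
        import boto3  # imported lazily so the sketch can be exercised offline

        # "my-global-table" is a placeholder table name.
        table = boto3.resource("dynamodb").Table("my-global-table")
    item = build_item(json.loads(event["body"]))
    table.put_item(Item=item)
    return {"statusCode": 200, "body": json.dumps(item)}
```

Passing `table` as an argument is a design choice for this sketch: it lets you test the handler with a stand-in object before wiring it to a real table.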

Once deployed, you can POST a new item with a session_comment attribute as follows:

The GET function does not change, since it already returns the full object queried by item_id.

Testing the GET function should return the newly added session_comment as follows:

As I previously mentioned — simple :)

Let’s talk DynamoDB streams and event-driven architectures

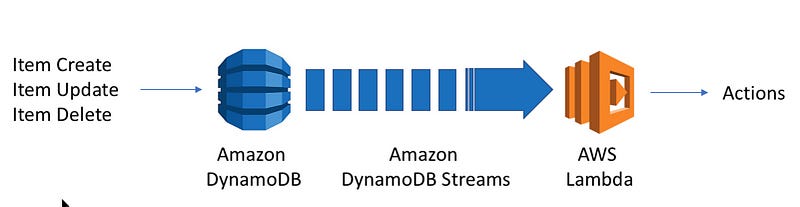

Simply put, a DynamoDB stream is a time-ordered flow of information reflecting changes to items in a DynamoDB table. A change can be any of the following: the creation, update or deletion of an item in the table.

It’s important to recognize that DynamoDB Streams guarantees the following:

Each stream record appears exactly once in the stream.

For each item that is modified in a DynamoDB table, the stream records appear in the same sequence as the actual modifications to the item.

I love DynamoDB streams because they fit perfectly into my favorite architecture design paradigm: event-driven architecture. Instead of waiting or polling for changes, actions happen when changes in the system occur. Sure, we have talked about this architectural pattern for years, but until recently it was very difficult to implement at scale.

So, what changed? AWS Lambda happened. AWS Lambda brought event-driven compute to everyone and took the event-driven paradigm to a whole new level. Today, companies like Netflix (BLESS) and Capital One (Cloud Custodian) use event-driven architectures powered by AWS Lambda to handle real-time security, compliance and policy management.

Going back to DynamoDB streams: hooking up your stream with AWS Lambda lets you build an event-driven architecture that uses changes in the database as an event source. This is pretty amazing if you ask me: no need to poll, no need to query the database. Actions can happen when items in the database are created, updated or deleted.

In other words, you can integrate Amazon DynamoDB with AWS Lambda so that you can create triggers — pieces of code that automatically respond to events in DynamoDB Streams. With triggers, you can build applications that react to data modifications in DynamoDB tables.

Now you are probably wondering what I want to do with that …

Good question, Vincent! The answer is simple: I want to do sentiment analysis on the session_comment field. I want to know if the comment is POSITIVE, NEGATIVE, NEUTRAL or MIXED. And there is a simple way to do that on AWS: say hello to Amazon Comprehend.

Voice of customers: What are you talking about??

Amazon Comprehend is a natural language processing (NLP) service that uses machine learning to find insights and relationships in text. It can identify the language of the text and extract key phrases, places, people, brands, or events. The service can also understand how positive or negative the text is. In other words, a lot of cool stuff!

One particular aspect of Comprehend that I’m very interested in exploring is the detect-sentiment API.
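As a minimal sketch, assuming boto3 and credentials that allow `comprehend:DetectSentiment`, the call could be wrapped like this. The `detect_sentiment` wrapper name and the default language code are choices made for this example; the underlying boto3 call is `detect_sentiment(Text=..., LanguageCode=...)`:

```python
def detect_sentiment(text, comprehend=None, language_code="en"):
    """Return (label, scores) for `text` using Amazon Comprehend."""
    if comprehend is None:
        import boto3  # imported lazily so the sketch can be exercised offline

        comprehend = boto3.client("comprehend")
    response = comprehend.detect_sentiment(Text=text, LanguageCode=language_code)
    # "Sentiment" is one of POSITIVE, NEGATIVE, NEUTRAL or MIXED;
    # "SentimentScore" holds a confidence value for each of those labels.
    return response["Sentiment"], response["SentimentScore"]
```

For example, `label, scores = detect_sentiment("I loved this session")` gives you the overall label plus the per-label confidence scores.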

Yes, it is THAT simple! One line of code and you get state-of-the-art sentiment analysis in your application.

Fun fact: The Comprehend service constantly learns and improves from a variety of information sources, including Amazon.com product descriptions and consumer reviews — one of the largest natural language data sets in the world — to keep pace with the evolution of language.

Bring it all together

Now let’s glue things together and integrate DynamoDB streams with AWS Lambda and Amazon Comprehend.

First, let’s have a look into the DynamoDB stream and the objects that are being sent in it. Following is a stream object being sent after a POST request.

Here is the full object being streamed. It is basically a list of records.
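For orientation, here is an illustrative event in the shape Lambda receives from a DynamoDB stream, written as a Python dict. All values (IDs, region, account number, comment text) are made up, and the `comments_from_event` helper is hypothetical; it only shows how to reach the session_comment inside `NewImage`:

```python
SAMPLE_EVENT = {
    "Records": [
        {
            "eventID": "1",
            "eventName": "INSERT",
            "eventVersion": "1.1",
            "eventSource": "aws:dynamodb",
            "awsRegion": "us-east-1",
            "dynamodb": {
                # Attribute values use DynamoDB's typed JSON ("S" = string).
                "Keys": {"item_id": {"S": "abc-123"}},
                "NewImage": {
                    "item_id": {"S": "abc-123"},
                    "session_comment": {"S": "I loved this session"},
                },
                "SequenceNumber": "111",
                "SizeBytes": 59,
                "StreamViewType": "NEW_AND_OLD_IMAGES",
            },
            "eventSourceARN": (
                "arn:aws:dynamodb:us-east-1:123456789012:"
                "table/example/stream/2018-01-01T00:00:00.000"
            ),
        }
    ]
}


def comments_from_event(event):
    """Yield (event_name, item_id, comment) for each record with a comment."""
    for record in event.get("Records", []):
        image = record["dynamodb"].get("NewImage", {})
        if "session_comment" in image:
            yield (
                record["eventName"],
                image["item_id"]["S"],
                image["session_comment"]["S"],
            )
```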

The most interesting part is the field called eventName — used here as an INSERT into DynamoDB. There are several types of data modification that can be performed on the DynamoDB table:

- INSERT: a new item was added to the table.
- MODIFY: one or more of an existing item's attributes were modified.
- REMOVE: the item was deleted from the table.

Following is the Lambda function that hooks into the DynamoDB stream, calls Amazon Comprehend to analyse the session_comment field and stores the result back in DynamoDB. You will notice why the eventName is important.
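A minimal sketch of such a stream handler, under a few assumptions: `item_id` is the table's only key and is a string, the result is stored in an attribute named `sentiment`, comments are in English, and the table name is a placeholder. None of these names are confirmed by the post:

```python
def handler(event, context, comprehend=None, table=None):
    """Analyse the session_comment of newly inserted items."""
    if comprehend is None:
        import boto3  # imported lazily so the sketch can be exercised offline

        comprehend = boto3.client("comprehend")
    if table is None:
        import boto3

        # "my-global-table" is a placeholder table name.
        table = boto3.resource("dynamodb").Table("my-global-table")

    processed = 0
    for record in event.get("Records", []):
        # Only react to new items. The update_item call below writes to the
        # same table and shows up on the stream as a MODIFY record, so
        # handling MODIFY too would re-analyse what this function just wrote.
        if record.get("eventName") != "INSERT":
            continue
        image = record["dynamodb"].get("NewImage", {})
        comment = image.get("session_comment", {}).get("S")
        if not comment:
            continue
        result = comprehend.detect_sentiment(Text=comment, LanguageCode="en")
        table.update_item(
            Key={"item_id": image["item_id"]["S"]},
            UpdateExpression="SET #s = :s",
            ExpressionAttributeNames={"#s": "sentiment"},
            ExpressionAttributeValues={":s": result["Sentiment"]},
        )
        processed += 1
    return {"processed": processed}
```

The eventName check is the important part: because the function writes back to the table it is listening to, filtering on INSERT keeps it from reacting to its own updates.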

Note: Don’t be afraid of the remove_key() or replace_decimals() functions. They are used to clean up the object a bit and make my life easier later on :)
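For readers curious what helpers with these names typically do, here is a hypothetical sketch. It is a guess at their behavior, not necessarily the code used in this project: `replace_decimals` converts the `Decimal` values that boto3 returns for DynamoDB numbers into plain ints and floats, and `remove_key` strips a given key from nested dicts and lists.

```python
from decimal import Decimal


def replace_decimals(obj):
    """Recursively convert Decimal values into int or float."""
    if isinstance(obj, list):
        return [replace_decimals(value) for value in obj]
    if isinstance(obj, dict):
        return {key: replace_decimals(value) for key, value in obj.items()}
    if isinstance(obj, Decimal):
        # Whole numbers become int, everything else becomes float.
        return int(obj) if obj % 1 == 0 else float(obj)
    return obj


def remove_key(obj, key):
    """Return a copy of `obj` with every occurrence of `key` removed."""
    if isinstance(obj, dict):
        return {k: remove_key(v, key) for k, v in obj.items() if k != key}
    if isinstance(obj, list):
        return [remove_key(value, key) for value in obj]
    return obj
```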

Note 2: The funny syntax of the table.update_item() function is explained here.
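In short, the syntax comes from DynamoDB update expressions: `UpdateExpression` describes the change using placeholders, `ExpressionAttributeNames` maps `#name` placeholders to attribute names, and `ExpressionAttributeValues` maps `:value` placeholders to the values. The sketch below builds those arguments for a simple SET update; `build_update_kwargs` is a hypothetical helper written for this example:

```python
def build_update_kwargs(key, attributes):
    """Build keyword arguments for table.update_item() that SET `attributes`."""
    if not attributes:
        raise ValueError("at least one attribute is required")
    names, values, assignments = {}, {}, []
    for index, (attribute, value) in enumerate(attributes.items()):
        # Placeholders avoid clashes with DynamoDB reserved words.
        names[f"#n{index}"] = attribute
        values[f":v{index}"] = value
        assignments.append(f"#n{index} = :v{index}")
    return {
        "Key": key,
        "UpdateExpression": "SET " + ", ".join(assignments),
        "ExpressionAttributeNames": names,
        "ExpressionAttributeValues": values,
    }
```

With a boto3 Table resource you would then call `table.update_item(**build_update_kwargs({"item_id": "abc-123"}, {"sentiment": "POSITIVE"}))`.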

The template to deploy a Lambda function triggered from DynamoDB using the Serverless Framework is the following:

Notice the functions: streamFunction type used in the template to differentiate it from other types and the events: stream field which holds the Amazon Resource Name (ARN) of the DynamoDB stream.

To get the ARN, use the following command:
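The ARN can also be looked up from Python. A minimal sketch, assuming boto3 is available and a stream is already enabled on the table; `get_stream_arn` is a hypothetical helper, while `describe_table` and its `LatestStreamArn` field are part of the DynamoDB API:

```python
def get_stream_arn(table_name, dynamodb=None):
    """Return the ARN of the table's latest stream, or None if there is none."""
    if dynamodb is None:
        import boto3  # imported lazily so the sketch can be exercised offline

        dynamodb = boto3.client("dynamodb")
    description = dynamodb.describe_table(TableName=table_name)
    # LatestStreamArn is only present when a stream is enabled on the table.
    return description["Table"].get("LatestStreamArn")
```

Calling `get_stream_arn("my-global-table")`, with your own table name in place of the placeholder, returns the value to paste into the template.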

To deploy, same-same as previously:

Now it is time to test the newly augmented API.

Voilà!! You have just, with minimal effort, integrated sentiment analysis into your application. Bravo :-)

but .. why .. you .. do .. that???

You might be wondering why I would choose this event-driven, stream-to-Lambda pattern to augment the data instead of calling Amazon Comprehend directly from the client before storing the data in DynamoDB. With this pattern, the client makes one call instead of two.

I call this efficiency :-)

But that’s not all! By using this approach, we don’t have to give the client extra permissions to call Comprehend directly, which keeps our security and access policy to the bare minimum.

That’s all for now folks! I hope this was informative. For the next post in this series, we’ll move into resiliency and chaos engineering — stay tuned!