Caching for Resiliency

As noted in the New York Times article “For Impatient Web Users, an Eye Blink Is Just Too Long to Wait,” a user’s perception of quality and a good experience is directly correlated to the speed at which content is delivered to them. Speed matters, and four out of five users will click away if loading takes too long. In fact, research shows that a 250ms difference is enough to give the faster of two competing solutions a competitive advantage.

In 2007, Greg Linden, who previously worked at Amazon, stated that through A/B testing, he tried delaying a retail website page loading time in increments of 100ms, and found that even small delays would result in substantial and costly drops in revenue. With every 100ms increase in load time, sales dropped one percent. At the scale of Amazon, it’s clear that speed matters.

Content providers use caching techniques to get content to users faster. Cached content is served as if it is local to users, improving the delivery of static content.

A cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. (Source: Wikipedia)

While caching is often associated with accelerating content delivery, it is also important from a resiliency standpoint. We’ll explore this in more detail in this post.

Types of Caching

Static and Dynamic Content Caching

Traditionally, it’s been standard practice to consider caching for static content only — content that rarely or never changes throughout the life of the application, for example: images, videos, CSS, JavaScript, and fonts. Content providers across the globe use content delivery networks (CDN) such as Amazon CloudFront to get content to users faster, especially when the content is static. Using a globally distributed network of caching servers, static content is served as if it is local to users, improving delivery. While CloudFront can be used to deliver static content, it can also be used to deliver dynamic, streaming, and interactive content. It uses persistent TCP connections and variable time-to-live (TTL) to accelerate the delivery of content, even if it cannot be cached.

From a resilience standpoint, a CDN can improve the DDoS resilience of your application when you serve web application traffic from edge locations distributed around the world. CloudFront, for example, only accepts well-formed connections, and therefore prevents common DDoS attacks such as SYN floods and UDP reflection attacks from reaching your origin.

Since DDoS attacks are often geographically isolated close to the source, using a CDN can greatly improve your ability to continue serving traffic to end users during larger DDoS attacks. To learn more about DDoS resiliency, check out this white paper.

Another caching technique used to serve static web content is called page caching. This is where the rendered output of a given web page is stored in files in order to avoid potentially time-consuming queries to your origin. Page caching is simple, and is an effective way of speeding up content delivery. It is often used by search engines to deliver search results to end users faster.

From a resiliency point of view, the real benefit of caching applies to caching dynamic content: data that changes fast but is not unique per request. Caching short-lived content that is not unique per request is useful since, even within a short timeframe, that content might be fetched thousands or even millions of times, which could have a devastating effect on the database.

You can use in-memory caching techniques to speed up dynamic applications. These techniques, as the name suggests, cache data and objects in memory to reduce the number of requests to primary storage sources, often databases. In-memory caching offerings include caching software such as Redis or Memcached, or they can be integrated with the database itself, as is the case with DAX for DynamoDB.

Caching for Resiliency

Here are some benefits of caching related to resiliency:

Improved application scalability: Data stored in the cache can be retrieved and delivered faster to end-users, thus avoiding a long network request to the database or to external services. Moving some responsibilities for delivering content to the cache allows the core application service (the business logic) to be less utilized and take more incoming requests — and thus scale more easily.

Reduced load on downstream services: By storing data in the cache, you decrease the load on downstream dependencies and relieve the database from serving the same frequently accessed data over and over again, saving downstream resources.

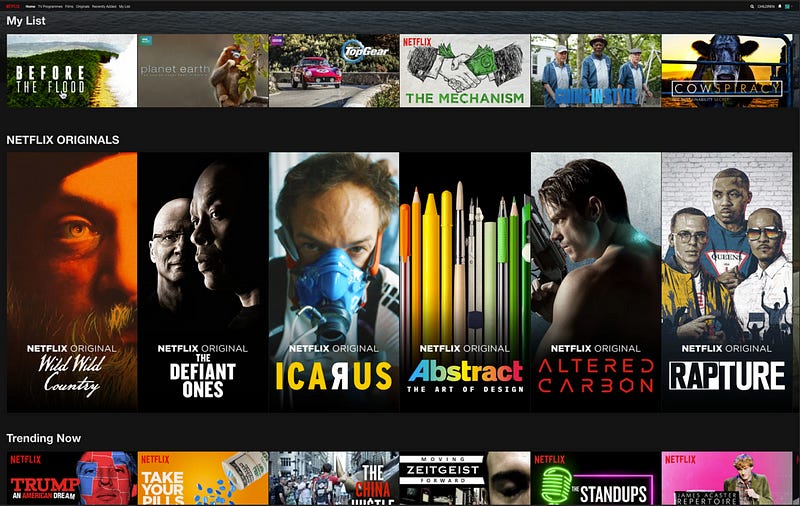

Improved degradation: Cached data can be used to populate an application’s user interface even if a dependency is temporarily unavailable. Take Netflix’s home screen (see below). If the recommendation engine fails to deliver personalized content to me (see “My List” in the image below), Netflix can, and will, always show me something else, such as “Netflix Originals”, “Trending Now”, or similar. These last two content collections, like most of the Netflix home screen, are served directly from the cache. This is called graceful degradation.

Lower overall solution cost: Using cached data can help reduce overall solution costs, especially for pay-per-request services. A cache can also hold the results of expensive and complex operations so that subsequent calls don’t have to recompute them.

All the benefits listed above not only contribute to improved performance, but also to improving the resiliency and availability of applications.
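The graceful degradation benefit above can be sketched as a fallback read. This is only an illustration under stated assumptions, not a description of how Netflix implements its home screen: `fetch_recommendations`, `home_screen_rows`, `cached_rows`, and the row names are hypothetical stand-ins.

```python
# Hypothetical stand-in: a cache of pre-computed, non-personalized rows.
cached_rows = {"trending_now": ["Title A", "Title B"]}


class DependencyUnavailable(Exception):
    """Raised when the (hypothetical) recommendation service cannot answer."""


def fetch_recommendations(user_id, healthy=True):
    # Simulates a call to a personalization service that may fail.
    if not healthy:
        raise DependencyUnavailable("recommendation engine is down")
    return [f"Pick for {user_id}"]


def home_screen_rows(user_id, healthy=True):
    # Prefer personalized content, but degrade to cached, non-personalized
    # content instead of failing the whole page.
    try:
        return {"my_list": fetch_recommendations(user_id, healthy)}
    except DependencyUnavailable:
        return {"trending_now": cached_rows["trending_now"]}
```

The point of the design is that the failure of one dependency changes what the user sees, not whether the page renders.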

However, using caches is not without its challenges. If not well understood, caching may severely impact application resiliency and availability.

Dealing with (In)consistency

Caching dynamic content means that changes performed on the primary storage (e.g. the database) are not immediately reflected in the cache. The result of this lag, or inconsistency between the primary storage and the cache, is referred to as eventual consistency.

To cope with that inconsistency, clients, and especially user interfaces, must tolerate eventual consistency; in other words, they must tolerate stale state. Stale state, or stale data, is data that does not reflect reality. Cached data should always be considered and treated as stale. Determining whether your application can tolerate stale data is the key to caching.

For every application out there, there is an acceptable level of staleness in the data.

For example, if your application is serving a weather forecast to the public on a website, staleness is acceptable, with a disclaimer at the bottom of the page that the forecast might be a few minutes old. But when serving up that forecast for in-flight airline pilot information, you want the latest and most accurate information.

Cache Expiration and Eviction

One of the most challenging aspects of designing caching implementations is dealing with data staleness. It’s important to pick the right cache expiration policy and avoid unnecessary cache eviction.

An expired cached object is one that has run out of time-to-live (TTL), and should be removed from the cache. Associating a TTL with cached objects depends on the client requirements, and its tolerance to stale data.
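As a rough sketch of TTL-based expiration, the class below stores an expiry time next to each value and treats an expired entry as a miss. `TTLCache` and its injectable `clock` parameter are assumptions made for illustration; production caches such as Redis or Memcached handle expiration for you.

```python
import time


class TTLCache:
    """Minimal in-memory cache where each entry expires after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}  # key -> (value, expires_at)

    def set(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            # The TTL has run out: drop the entry and report a miss.
            del self._entries[key]
            return None
        return value
```

The TTL you pass in is where the client's tolerance to stale data is encoded: a public weather page might use minutes, while a more sensitive reader would use a much shorter value or no cache at all.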

An evicted cached object, however, is one that is removed from the cache because the cache has run out of memory, typically because it was not sized correctly. Expiration is a regular part of the caching process. Eviction, on the other hand, can cause unnecessary performance problems.

To avoid unnecessary eviction, pick the right cache size for your application and request pattern. In other words, you should have a good understanding of the volume of requests your application needs to support, and the distribution of cached objects across these requests. This information is hard to get right and often requires real production traffic.

Therefore, it is common to first (guess)estimate the cache size, and once in production, emit accurate cache metrics such as cache-hits and cache-misses, expiration vs. eviction counts, and requests volume to downstream services. Once you have that information, you can adjust the cache size to ensure the highest cache-hit ratio, and thus the best cache performance.
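To make these metrics concrete, here is a small sketch of a size-bounded cache that counts hits, misses, and evictions. It assumes a least-recently-used (LRU) eviction policy, which this post does not prescribe, and `BoundedCache` is a hypothetical name; in practice you would read equivalent counters from your caching service.

```python
from collections import OrderedDict


class BoundedCache:
    """LRU cache with a fixed capacity that counts hits, misses and evictions."""

    def __init__(self, max_items):
        self.max_items = max_items
        self._items = OrderedDict()
        self.hits = 0
        self.misses = 0
        self.evictions = 0

    def get(self, key):
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._items[key]
        self.misses += 1
        return None

    def set(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.max_items:
            # Out of room: evict the least recently used entry.
            self._items.popitem(last=False)
            self.evictions += 1

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A steadily growing `evictions` counter alongside a low `hit_ratio()` is the kind of signal that suggests the cache is undersized for the request pattern.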

Never forget to go back and adjust the initial (guess)estimate. Leaving it unadjusted can lead to capacity issues, which can, in turn, lead to unexpected behavior and failures.

Caching Patterns

There are two basic caching patterns, and they govern how an application writes to and reads from the cache: (1) Cache-aside; and (2) Cache-as-SoR (System of Record) — also called inline-cache.

Cache-aside

In a cache-aside pattern, the application treats the cache as a separate component from the SoR (e.g. the database). The application code first queries the cache, and if the cache contains the requested data, retrieves it from there, bypassing the database. If the cache does not contain the requested data, the application code fetches it directly from the database, stores it in the cache, and then returns it. In Python, a cache-aside pattern looks something like this:

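```python
def get_object(key):
    # Try the cache first.
    value = cache.get(key)
    # Compare with None so cached falsy values (0, "", []) still count as hits.
    if value is None:
        # Cache miss: read from the database, then populate the cache.
        value = database.get(key)
        cache.set(key, value)
    return value
```

The most common cache-aside systems are Memcached and Redis. Both engines are supported by Amazon ElastiCache, which works as an in-memory data store and cache to support applications requiring sub-millisecond response times.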

Inline-cache

In an inline-cache pattern, the application uses the cache as though it were the primary storage, making no distinction in the code between the cache and the database. This pattern delegates reading and writing activities to the cache. Inline-cache often uses a read-through and write-through pattern (sometimes, but rarely, write-behind) transparently to the application code. In Python, an inline-cache pattern looks something like this:

```python
def get_object(key):
    # The cache loads from the database on a miss; the caller never sees it.
    return inline_cache.get(key)
```

In other words, with an inline-cache pattern, you don’t see the interaction with the database; the cache handles that for you.
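To show what the cache is doing on the application's behalf, here is a minimal sketch of a read-through and write-through cache. `InlineCache` and the dict used as the database are hypothetical stand-ins; real inline caches such as DAX implement this behind their own client APIs.

```python
class InlineCache:
    """Read-through / write-through cache that fronts a backing store."""

    def __init__(self, store):
        self._store = store  # hypothetical backing store (the SoR), dict-like
        self._cache = {}

    def get(self, key):
        # Read-through: on a miss, the cache itself loads from the store.
        # A key missing from the store raises KeyError in this sketch.
        if key not in self._cache:
            self._cache[key] = self._store[key]
        return self._cache[key]

    def set(self, key, value):
        # Write-through: update the store and the cache together.
        self._store[key] = value
        self._cache[key] = value


database = {"user:1": "Ada"}  # stand-in for a real database
inline_cache = InlineCache(database)


def get_object(key):
    return inline_cache.get(key)
```

Note that `get_object` never mentions `database`: all knowledge of the system of record lives inside the cache component.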

Inline-cache patterns are easier to maintain since the cache logic resides outside of the application and the developer doesn’t have to worry about the cache. However, they reduce the observability of the request path and can lead to hard-to-handle situations: if the inline-cache becomes unavailable or fails, the client has no way to compensate.

On AWS, you can use standard inline-cache implementations for HTTP caching, such as NGINX or Varnish, or implementation-specific ones such as DAX for DynamoDB.

Considerations on Write-Around Strategy

When you write an item into an inline-cache, the cache will often ensure that the cached item is synchronized with the item as it exists in the database. This pattern is helpful for read-heavy applications. However, if another application needs to write directly into the database table without using the cache as write-through, the item in the cache will no longer be in sync with the database: it will be inconsistent. This will be the case until the item is evicted from the cache, or until another request updates the item using the write-through cache.

If your application needs to write large quantities of data to the database, it might make sense to bypass the cache and write the data directly to the database. Such a write-around strategy reduces write latency: after all, the cache introduces an intermediate node, and thus adds latency. If you decide to use a write-around strategy, be consistent and don’t mix write-through with write-around, or the cached item will become out of sync with the data in the database. Also, remember that with write-around, the inline-cache populates the cache only at read time. That way, you ensure that the most frequently read data is cached, and not the most frequently written data.
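The following sketch illustrates why mixing the two strategies is risky. `WriteThroughCache`, its method names, and the `demo` function are hypothetical and kept deliberately tiny; the store is a plain dict standing in for a database.

```python
class WriteThroughCache:
    """Tiny write-through cache over a dict-like store (both hypothetical)."""

    def __init__(self, store):
        self.store = store
        self.cached = {}

    def get(self, key):
        if key not in self.cached:  # populated at read time on a miss
            self.cached[key] = self.store[key]
        return self.cached[key]

    def write_through(self, key, value):
        self.store[key] = value
        self.cached[key] = value

    def write_around(self, key, value):
        # Bypass the cache entirely; any existing cached copy is left untouched.
        self.store[key] = value


def demo():
    store = {}
    cache = WriteThroughCache(store)
    cache.write_through("price", 10)  # cache and store agree
    cache.write_around("price", 12)   # store moves ahead of the cache
    stale = cache.get("price")        # still the old cached value
    cache.write_around("stock", 5)    # never cached before
    fresh = cache.get("stock")        # loaded from the store on first read
    return stale, store["price"], fresh
```

In this sketch, `"price"` stays stale because it was cached by an earlier write-through, while `"stock"`, which was only ever written around, is read correctly the first time it is requested.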

Final Considerations on Resiliency

Soft and hard-TTL

From a resilience point of view, stale content does need to expire eventually, but it should still be served when the origin server is unavailable, even if its TTL has expired. Doing so provides resiliency, high availability, and graceful degradation at times of peak load or origin failure. To meet this requirement, some caching frameworks support both a soft-TTL and a hard-TTL: cached content is refreshed once the soft-TTL has expired, and if the refresh fails, the caching service continues serving the stale content until the hard-TTL expires.
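A minimal sketch of the soft-TTL and hard-TTL behavior might look like the following. `SoftHardTTLCache`, the `loader` callback, and the `clock` parameter are assumptions for illustration and do not correspond to any specific framework's API.

```python
import time


class SoftHardTTLCache:
    """Serves stale entries between soft and hard TTL if refreshing fails."""

    def __init__(self, loader, soft_ttl, hard_ttl, clock=time.monotonic):
        self.loader = loader      # hypothetical function that calls the origin
        self.soft_ttl = soft_ttl
        self.hard_ttl = hard_ttl
        self.clock = clock
        self._entries = {}        # key -> (value, stored_at)

    def get(self, key):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[1] < self.soft_ttl:
            return entry[0]  # still fresh
        try:
            value = self.loader(key)  # soft TTL expired or miss: refresh
        except Exception:
            # Origin failed: fall back to stale data until the hard TTL.
            if entry is not None and now - entry[1] < self.hard_ttl:
                return entry[0]
            raise
        self._entries[key] = (value, now)
        return value
```

This sketch refreshes synchronously on the request path; a real implementation might refresh in the background instead, so that callers are not slowed down by a struggling origin.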

Request coalescing

Another consideration is what happens when thousands of clients request the same piece of data at almost the same time and that request is a cache miss. This can send a large number of simultaneous requests to the downstream storage, which can exhaust its resources. These situations often occur at startup, at restart, or during rapid scale-up. To avoid such scenarios, some implementations support request coalescing (or “waiting rooms”), where simultaneous cache misses collapse into a single request to the downstream storage. DAX for DynamoDB supports request coalescing automatically (within each node of the distributed caching cluster fronting DynamoDB), while Varnish and NGINX have configurations to enable it.
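One way to picture request coalescing within a single process is the thread-based sketch below. `CoalescingLoader`, `_Call`, and the `load` callback are hypothetical names; this is not how DAX, Varnish, or NGINX implement it, only an illustration of the idea that the first caller fetches while the others wait for its result.

```python
import threading


class _Call:
    """Shared state for one in-flight downstream request."""

    def __init__(self):
        self.done = threading.Event()
        self.result = None
        self.error = None
        self.waiters = 0  # callers that joined instead of loading


class CoalescingLoader:
    """Collapses concurrent loads of the same key into one downstream call."""

    def __init__(self, load):
        self._load = load          # hypothetical downstream fetch function
        self._lock = threading.Lock()
        self._in_flight = {}       # key -> _Call shared by all waiters

    def get(self, key):
        with self._lock:
            call = self._in_flight.get(key)
            leader = call is None
            if leader:
                call = self._in_flight[key] = _Call()
            else:
                call.waiters += 1
        if leader:
            try:
                call.result = self._load(key)  # the only downstream request
            except Exception as exc:
                call.error = exc
            finally:
                with self._lock:
                    del self._in_flight[key]
                call.done.set()  # wake every waiter
        else:
            call.done.wait()  # the "waiting room"
        if call.error is not None:
            raise call.error
        return call.result
```

In a cache-aside setup, a loader like this would typically wrap the database read on the miss path, so that a burst of identical misses results in one query rather than thousands.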

Final thoughts

When deploying applications in the cloud, you will have to use a combination of the different types of cache and techniques mentioned in this article. There is no silver bullet, however, so work with everyone involved in designing, building, deploying, and maintaining the application, from the UI designer to the backend developer, so that you know the what, the when, and the where of using caching.

Wrapping up

That’s it for today folks! I hope you enjoyed this post. Please don’t hesitate to provide feedback, or share your opinions.

What should Part 5 be? Please let me know!

Direct links to previous posts:

Part 1 — Embracing Failure at Scale

Part 2 — Avoiding Cascading Failures

Part 3 — Preventing Service Failures with Health Check

-Adrian