I deleted a production database

And learned a few things along the way

It happened more than a decade ago. While I might have forgotten some technical details, I remember how I felt like it was yesterday.

“I am done. It is the end, and I will be fired now.”

These were the words I kept repeating to myself.

I had just deleted a production database critical to our customers.

It was late evening. I had been debugging an issue with one of our services and was ready to call it a day and go home.

All of a sudden, monitoring alarms started to go off. Requests were timing out at an unprecedented rate.

Our customer, already on the phone with the leadership team, could not have been unhappier.

We had told them that increasing the load on our service by a factor of ten overnight wouldn’t be an issue.

And why would it be an issue? Everything worked perfectly in the test environment.

Yet, there I was, staring at the logs.

HTTP status 504: Gateway timeout server response timeout

HTTP Status 504, or Gateway Timeout error, typically means that the web server didn’t receive a timely response from an upstream server it needed to access to complete the request.

Why was the server timing out?

After digging into the server logs, I noticed something strange. The application, written in Python, was failing most of the calls accessing the database. Unfortunately, that exception was being thrown from a poorly written try/except statement. The original exception was caught but retained in a variable, then re-raised explicitly.

In Python, when the code gets an exception, the traceback typically gives the information about what went wrong.

Unfortunately, that poorly written try/except statement did not preserve the full traceback of the error. It was initially designed so that the application could roll back database transactions if an error occurred but commit otherwise: first, catch the exception in the abstraction layer, then roll back, and then raise the exception again to propagate the error handling.
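A minimal sketch of that commit-or-roll-back pattern, written so that the traceback survives. This is not the original code: the `transaction` helper, the `increment_counter` function, and the use of SQLite are assumptions made for illustration. The key detail is the bare `raise`. In Python 2, re-raising a stored exception with `raise e` restarted the traceback at the re-raise line, which matches the symptom described above. In Python 3, the exception object carries its traceback, but a bare `raise` is still the idiomatic form.

```python
import sqlite3
import traceback
from contextlib import contextmanager


@contextmanager
def transaction(conn):
    """Commit on success; roll back and re-raise on failure."""
    try:
        yield conn
        conn.commit()
    except Exception:
        conn.rollback()
        raise  # bare raise: propagate the original exception and its traceback


def increment_counter(conn, counter_id):
    # The table does not exist, so this call fails on purpose.
    conn.execute(
        "UPDATE missing_table SET count = count + 1 WHERE id = ?", (counter_id,)
    )


def run():
    """Trigger the failure and return the formatted traceback."""
    conn = sqlite3.connect(":memory:")
    try:
        with transaction(conn):
            increment_counter(conn, 1)
    except sqlite3.OperationalError:
        return traceback.format_exc()
    finally:
        conn.close()
    return ""
```

The text returned by `run()` still names `increment_counter`, the function where the error actually occurred, which is the information the logs were missing that evening.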

Except that now, it wasn’t.

In the hope of better luck, I logged on to the database to search for slow queries. When the slow query log feature is enabled on MySQL, it will, by default, log any query that takes longer than 10 seconds to execute. Since the timeout on the client was 5 seconds, I changed the long_query_time parameter setting to 5 seconds.

I logged queries for a while and reverted. Slow query logging increases the I/O workload on the system and can fill the disk space. The last thing I wanted was to fill up the database’s disk.

Looking at the MySQL process log, I noticed a long list of queries with the following:

Waiting for table level lock

A quick look at the output of SHOW PROCESSLIST confirmed my fear.

The problem was coming from a database UPDATE query like the following:

UPDATE counter
SET count = count + 1
WHERE id = <id>

Looking at the table, I realized that id wasn’t indexed and that the UPDATE query was potentially locking the table when updating the value.

The problem is that when MySQL releases the lock, it is given to other processes in the write lock queue first, then to the threads in the read lock queue. And, if there are many UPDATES for a table, SELECT statements will wait until there are no more updates.
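One way to check whether a WHERE clause can use an index is to ask the database for its query plan. The sketch below uses SQLite from the Python standard library as a stand-in for MySQL, so the plan text differs from what MySQL prints; on MySQL the analogous check is `EXPLAIN`. The index name `idx_counter_id` is hypothetical. An index does not remove locking by itself, but it avoids scanning the whole table for every update.

```python
import sqlite3


def update_plan(conn):
    """Return SQLite's query-plan text for the counter UPDATE."""
    rows = conn.execute(
        "EXPLAIN QUERY PLAN UPDATE counter SET count = count + 1 WHERE id = ?",
        (1,),
    ).fetchall()
    # The last column of each row holds the human-readable plan detail.
    return " | ".join(row[-1] for row in rows)


def plans_before_and_after_index():
    """Compare the plan for the same UPDATE without and with an index on id."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE counter (id INTEGER, count INTEGER)")
    conn.execute("INSERT INTO counter VALUES (1, 0)")
    before = update_plan(conn)  # full table scan
    conn.execute("CREATE INDEX idx_counter_id ON counter (id)")
    after = update_plan(conn)  # lookup through idx_counter_id
    conn.close()
    return before, after
```

Before the index exists, the plan reports a scan of `counter`; afterwards it mentions `idx_counter_id`.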

And indeed, the logs confirmed the issue. There were too many connection errors. The database was refusing new connections, and connection pools across the fleet were exhausted by the large number of UPDATE queries piling up in the database.

On MySQL, the number of connections is controlled by the max_connections system variable, with a default value of 151. It is also a dynamic system variable that can be set at runtime.

To support more connections and release the queue, I set max_connections to a larger value.

While trying my best to fix the issue, I could not stop thinking.

How did I let that code get into Production?

I had to find a fix and bring the system back up as soon as possible.

I kept repeating to myself.

Rollback, stop the bleeding, and understand later.

I couldn’t roll back. We had merged that “feature” months ago. Rolling back could introduce even more issues.

Instead, I had to stop the bleeding, and get rid of these UPDATE queries.

These queries were used to update counters.

The application’s API methods were wrapped inside a decorator function that used the UPDATE query to increase a counter by 1.

The purpose of that query was simple. The result was deadly.

It had been “hacked” together for reporting purposes and was necessary for our customers and sales team. I couldn’t just get rid of it.

Reporting was supposed to be done using a proper data warehouse, but that project was behind schedule.

Stop the bleeding; understand after

I had to find a way to move that UPDATE query out of the database.

Fortunately, I remembered reading an article from the Instagram engineering blog. Back then, these folks were pushing the envelope.

In that article, they explained how they mapped about 300 million photos to user IDs and turned to Redis, an advanced key-value store, to solve their problems.

I knew the current fix, increasing the maximum number of database connections, had only bought me time.

I had to find a proper solution — fast.

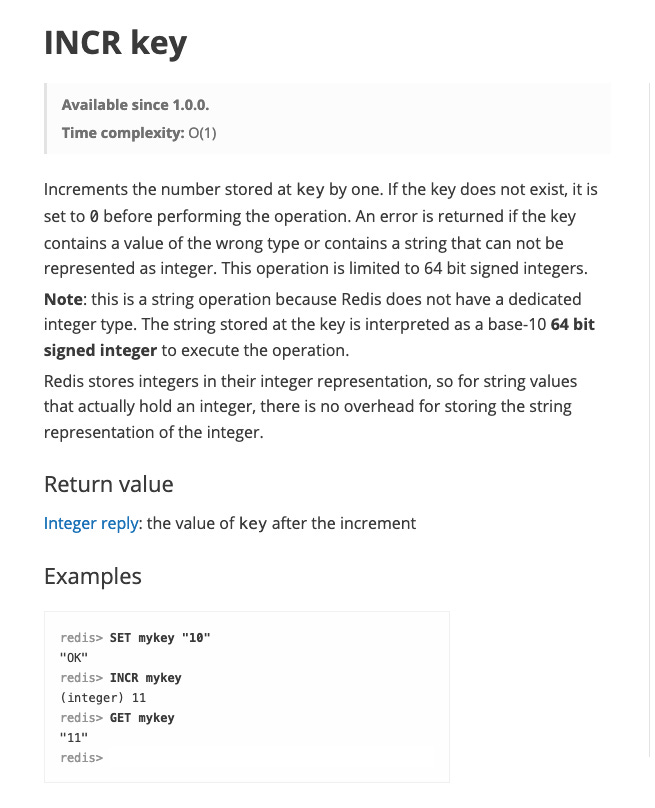

Inspired by Instagram’s success story, I, too, turned to Redis. In particular, I used the counter pattern built on the INCR command.

Since we already used Redis in another part of the system, I decided I would use it for that counter.

Thanks to a nice abstraction one of my colleagues implemented, I quickly removed all the UPDATE queries from the database and instead implemented the counters into Redis.
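A rough sketch of what such a counter decorator can look like once the increment goes to Redis instead of a SQL UPDATE. The original abstraction is not shown in this post, so every name here (`count_calls`, `InMemoryCounterStore`, the key `api:get_user:calls`) is hypothetical. The decorator only assumes a store with an `incr(name, amount=1)` method, which is the call a redis-py client exposes for the INCR command; the in-memory class is a stand-in so the example runs without a Redis server.

```python
import functools
import threading


class InMemoryCounterStore:
    """Stand-in exposing the same incr() call shape as a Redis client."""

    def __init__(self):
        self._counts = {}
        self._lock = threading.Lock()

    def incr(self, name, amount=1):
        # Increment under a lock and return the new value, like INCR does.
        with self._lock:
            self._counts[name] = self._counts.get(name, 0) + amount
            return self._counts[name]


def count_calls(store, key):
    """Increment `key` in `store` every time the wrapped function is called."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            store.incr(key)  # one atomic increment, no table lock involved
            return func(*args, **kwargs)

        return wrapper

    return decorator


store = InMemoryCounterStore()


@count_calls(store, "api:get_user:calls")
def get_user(user_id):
    return {"id": user_id}
```

Swapping `InMemoryCounterStore()` for a real Redis client would leave the decorated API methods untouched, which is the benefit of putting the counter behind a small abstraction.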

I had something that looked like a potential fix in a relatively short time.

The Instagram Engineering team was correct. Redis worked like a charm.

I just had to test that fix before deploying it to Production.

All the tests passed successfully — at least locally.

I connected to the Test environment, deployed the fix, and started to load test this new feature.

All seemed good. The results were better than I expected. High load, very low latency — it looked good!

I repeated the load test a few more times with different load parameters.

Between each test run, I had to manually delete the Test database because our tests weren’t random enough, and collision was a standard issue during testing.

We needed more time to improve our tests. Time we didn’t have.

But it didn’t matter then. In a short time, I had become good at connecting to the database, dropping the database, and running the tests.

mysql -h dbhost -u name -ppassword db
DROP DATABASE DB;
./run_tests --all --create_db true
(repeat)

During one of the tests, I received a call from my leadership.

Our customer wasn’t happy, and I had to push the fix immediately.

OK — one more test, and then I push.

I connected to the database and dropped the database to run the tests one last time.

DROP DATABASE DB;

And pressed enter.

The query took longer than expected to execute, longer than any of the previous times.

Something wasn’t right, but it was already too late.

Eventually, the command finished.

My heart was already pounding like it knew what was coming.

I quickly realized what had happened.

To my horror, I had mistakenly connected to the Production environment and deleted the Production database.

Happy ending

Fortunately, we had backups of the database; recovering it actually worked and didn’t take too long.

We lost some data, but it could have been much worse, considering I had deleted the production database.

Saying I was traumatized by what happened that day is an understatement.

I felt guilty. I felt terrible.

After recovering our service, I called our customer and apologized for my mistake.

When I hung up the phone, I broke down.

It took me a long time to recover.

Rather than focusing on the failure, we looked at ways to prevent it from happening again.

I was lucky. Many people have been publicly shamed and fired for making mistakes.

We looked at our tooling and found ways to ensure that mistaking the Production environment for the Test environment would be impossible.

We looked at the database and found ways to prevent accidental deletion.

We made it difficult to connect to production without realizing it.

We got rid of the bastion hosts and instead developed immutable architectures.

We looked at our culture and implemented war rooms with a person dedicated to communication and the rest dedicated to fixing issues.

We focused on the how instead of the what.

We turned failure into a learning experience.

What we learned

Making it difficult to mistake Production for the Test environment

At the time of this incident, we used a bastion host to connect to our applications.

A bastion host is a server whose purpose is to provide access to a private network from an external network, such as the Internet. You can use a bastion host to mitigate the risk of allowing SSH connections from an external network.

The first change we made to the bastion hosts was to change their SSH after-login screen and replace the standard EC2 SSH message.

From […]

[…] to one reflecting the environment in which the bastion host was operating (here Production).

You can edit the /etc/motd file in the bastion host instance.

$ sudo vi /etc/motd

______              _            _   _
| ___ \            | |          | | (_)
| |_/ / __ ___   __| |_   _  ___| |_ _  ___  _ __
|  __/ '__/ _ \ / _` | | | |/ __| __| |/ _ \| '_ \
| |  | | | (_) | (_| | |_| | (__| |_| | (_) | | | |
\_|  |_|  \___/ \__,_|\__,_|\___|\__|_|\___/|_| |_|

While it might seem trivial and almost silly, using visual cues like that is a handy and efficient way to alert people.

Visual cues are often used in safety engineering to reduce workplace injuries or fire hazards and prevent medical errors.

Our second improvement was adding the environment name to the aliases in the /etc/hosts file for the bastion hosts.

From […]

127.0.0.1 localhost
10.0.38.206 ec2-54-237-214-114.compute-1.amazonaws.com dbhost
10.0.38.202 ec2-54-233-212-111.compute-1.amazonaws.com app1
[...]

[…] to

127.0.0.1 localhost
10.0.38.206 ec2-54-237-214-114.compute-1.amazonaws.com prod_dbhost
10.0.38.202 ec2-54-233-212-111.compute-1.amazonaws.com prod_app1
[...]

Again, this is a simple change, but it prevented us from copy-pasting commands between environments and thus helped prevent human errors.

For example, connecting to the database from the bastion hosts in the Production or Test environment using the original command was no longer possible.

From […]

mysql -h dbhost -u name -ppassword db

[…] to

mysql -h prod_dbhost -u name -pprod_password db

and

mysql -h test_dbhost -u name -ptest_password db

Prevent accidental database deletion

We started by creating users with restricted permissions. It is a good practice not to give users ALL permissions on the databases. Instead, we used the GRANT statement to assign permissions on databases and tables independently and more precisely.

For example:

GRANT SELECT, INSERT ON database.* TO 'someuser'@'somehost';
GRANT ALL ON database.table TO 'someuser'@'somehost';

We also investigated database triggers. In (My)SQL, a trigger is a stored program invoked automatically in response to an event such as insert, update, or delete in the associated table.

For example, you can use triggers for MySQL databases to prevent deletion in a particular table.

mysql> SELECT * FROM employees;
+--------+------------+-----------+
| emp_no | first_name | last_name |
+--------+------------+-----------+
|      1 | John       | Doe       |
|      2 | Mary       | Jane      |
+--------+------------+-----------+
2 rows in set (0.00 sec)

mysql> DELIMITER $$
mysql> CREATE TRIGGER TRG_PreventDeletion
    -> BEFORE DELETE
    -> ON employees
    -> FOR EACH ROW
    -> BEGIN
    -> SIGNAL SQLSTATE '45000'
    -> SET MESSAGE_TEXT = 'Delete not allowed!';
    -> END $$
Query OK, 0 rows affected (0.00 sec)

mysql> DELIMITER ;
mysql> DELETE FROM employees WHERE emp_no = 2;
ERROR 1644 (45000): Delete not allowed!

You can even use triggers on SQL Server to prevent dropping databases.

1>
2> CREATE TRIGGER TRG_PreventDropDB ON ALL SERVER
3> FOR DROP_DATABASE
4> AS
5> BEGIN
6> RAISERROR('Dropping databases disabled on this server.', 16,1);
7> ROLLBACK;
8> END
9> GO
1>
2> DROP DATABASE TestDB;
3> GO
Msg 50000, Level 16, State 1, Server sql1, Procedure TRG_PreventDropDB, Line 6
Dropping databases disabled on this server.

If you use Amazon DynamoDB, you can prevent tables from being deleted by denying the DeleteTable operation with the following IAM policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": ["dynamodb:DeleteTable"],
      "Effect": "Deny",
      "Resource": "*"
    }
  ]
}

In general, we learned to work with least-privilege permissions. It means that when we set permissions, we granted only the permissions required to perform the task, and no more. We typically started with broad permissions while we tested or explored the permissions necessary for our use case. But as our use case matured, especially for Production, we worked to reduce the permissions we granted toward the least privilege.
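To see why a Deny statement like the one above is a useful guardrail, here is a deliberately simplified model of how such a policy is evaluated: an explicit Deny wins over any Allow, and anything not allowed is denied by default. This is an illustration only, not AWS's evaluation engine. It ignores conditions, principals, and case-insensitive action matching, and the broad Allow statement and the table ARN are hypothetical.

```python
from fnmatch import fnmatchcase

POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        # Hypothetical broad grant, as might exist early in a project.
        {"Action": ["dynamodb:*"], "Effect": "Allow", "Resource": "*"},
        # The guardrail from the article.
        {"Action": ["dynamodb:DeleteTable"], "Effect": "Deny", "Resource": "*"},
    ],
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def is_allowed(statements, action, resource):
    """Simplified evaluation: explicit Deny wins, then Allow, else implicit deny."""
    allowed = False
    for stmt in statements:
        action_match = any(fnmatchcase(action, a) for a in _as_list(stmt["Action"]))
        resource_match = any(
            fnmatchcase(resource, r) for r in _as_list(stmt["Resource"])
        )
        if not (action_match and resource_match):
            continue
        if stmt["Effect"] == "Deny":
            return False  # an explicit Deny overrides every Allow
        allowed = True
    return allowed
```

With this policy, `dynamodb:PutItem` is allowed through the broad grant, while `dynamodb:DeleteTable` is refused even though `dynamodb:*` would otherwise cover it.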

Immutable architectures

After the incident, we started questioning our need for SSH to connect to our bastion hosts, applications, and databases. It led us to explore immutable architectures.

The principle of immutable architectures is relatively simple: Immutable components are replaced for every deployment rather than being updated.

No updates are performed on live systems.

You always start from a new instance of the resource you are provisioning.

This deployment strategy supports the principle of Golden AMI. It is based on the Immutable Server pattern, which I love since it reduces configuration drift and ensures deployments are repeatable anywhere from the source.

If our instances can be disposed of and replaced rather than updated, why should we allow SSH?

And indeed, immutable infrastructure also transforms security.

No Keys on servers = better security

SSH turned OFF = better security

Mutability is one of the most critical attack vectors for cyber crimes.

When bad actors attack a host, they will often try to modify servers in some way, for example by changing configuration files, opening network ports, replacing binaries, modifying libraries, or injecting new code.

While detecting such attacks is essential, preventing them is much more critical.

In a mutable system, how do you guarantee that changes performed to a server are legitimate? Once a bad actor has the credentials, you can’t know anymore.

The best strategy you can leverage is immutability and denying all changes to the server.

A change means the server is compromised and should be quarantined, stopped, or terminated immediately.

That is DevSecOps at its best!

Detect. Isolate. Replace.

Extending the idea to cloud operations, the immutability paradigm lets you monitor any unauthorized changes happening in the infrastructure.
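As a small illustration of the "detect" step, the sketch below hashes every file under a directory and compares the result with a baseline taken when the instance was built. It is a simplified stand-in for real drift detection and file-integrity tooling; the function names and the demo files are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(root):
    """Map each file under `root` (by relative path) to its SHA-256 digest."""
    root = Path(root)
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def detect_drift(baseline, current):
    """Return sorted lists of added, removed, and modified paths."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(
            k for k in baseline.keys() & current.keys() if baseline[k] != current[k]
        ),
    }


def demo():
    """Build a tiny 'server', tamper with it, and report the drift."""
    with tempfile.TemporaryDirectory() as d:
        Path(d, "app.conf").write_text("port=80\n")
        Path(d, "server.bin").write_text("v1")
        baseline = snapshot(d)
        Path(d, "app.conf").write_text("port=8080\n")  # changed configuration
        Path(d, "backdoor.sh").write_text("#!/bin/sh\n")  # injected file
        return detect_drift(baseline, snapshot(d))
```

In an immutable setup, any non-empty result is treated as a signal to isolate and replace the instance rather than to repair it in place.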

To read more about the importance of Immutable Infrastructure, you can check this blog post.

Postmortems

Even though I deleted the database and still feel stupid about it today, it was clear that the conditions were such that mistakes were waiting to happen.

We understood that there was more to my mistake, and thus, we avoided labeling it a “human error.” The label ‘human error’ is prejudicial and hides much more than it reveals about how a system functions or malfunctions.

In the book Behind Human Error, researchers argue that failures occur in complex socio-technical systems and that behind every “human error,” there is a second story.

In engineering, learning from mistakes is done by doing a postmortem.

That’s what we did.

The key to learning with that process is to be blameless and not focus on human error.

Blameless means we investigate mistakes by focusing on the circumstantial factors of a failure and the decision-making process of individuals and the organization involved.

The key to blameless learning is to ask the right “Learner” questions and to create an environment that is safe to fail without fear of punishment. Creating a safe environment to fail is critical because engineers who think they will be fired are disincentivized to learn from their mistakes and prevent their recurrence. That is how failures repeat themselves.

By being blameless, organizations come out safer than they would if they had punished people for their mistakes.

To learn more about the blameless postmortem, read Blameless Postmortems and a Just Culture by John Allspaw.

Incident response and beyond

Finally, we looked at our incident response, or the lack thereof.

I got myself a copy of Incident Management for Operations authored by Rob Schnepp, Ron Vidal, and Chris Hawley of Blackrock 3 Partners.

The book discusses using the Incident Management System (IMS), pioneered in emergency services for fighting wildfires, in managing technology outages.

I love this book. It is full of handy information and helpful mnemonics.

CAN: Conditions, Actions, Needs

STAR: Size up, Triage, Act, Review

TIME: Tone, Interaction, Management, Engagement

That book taught us new ways to respond to and resolve incidents.

Towards fault injection

Beyond learning a lot from that book, the foreword by Jesse Robbins really challenged my thinking.

Jesse explains:

This book originated from an argument during my first year as Amazon’s “Master of Disaster,” as I began applying the incident management and operations practices I learned as a firefighter to improve Amazon’s overall reliability and resiliency.

That foreword put me on a mission to learn as much as possible about his work at Amazon.

In the early 2000s, Jesse created and led a program called GameDay, inspired by his experience training as a firefighter. The GameDay program was designed to test, train and prepare Amazon systems, software, and people to respond to a disaster.

Just as firefighters train to build an intuition to fight live fires, Jesse aimed to help his team build an intuition against live, large-scale catastrophic failures.

GameDay was designed to increase the resilience of Amazon’s retail website by purposely injecting failures into critical systems.

Initially, GameDay started with company-wide announcements that an exercise, sometimes as large as full-scale data-center destruction, would happen. Little detail about the activity was given, and the team had just a few months to prepare. The focal point was ensuring single regional points of failure would be tackled and eliminated.

During these exercises, tools and processes such as monitoring, alerts, and on-calls were used to test and expose flaws in the standard incident-response capabilities. GameDays became really good at surfacing classic architectural defects but would sometimes also reveal “latent defects”: problems that appear only because of the failure you’ve triggered. For example, incident management systems critical to recovery fail due to unknown dependencies triggered by the fault injected.

As the company grew, so did the theoretical blast radius of these GameDays, and these exercises were eventually halted as the potential impact on customers grew too large. These exercises have since evolved into different compartmentalized programs with the intent to exercise failure modes without causing any customer impact.
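On a much smaller scale than a GameDay, the idea of purposely injecting failures can be sketched in a few lines of code. The example below wraps a function so that it fails at a configurable rate, which makes it possible to exercise the caller's error handling on demand. It is a toy illustration with hypothetical names (`inject_fault`, `InjectedFault`, `call_with_retries`), not how GameDay or any AWS service works.

```python
import functools
import random


class InjectedFault(RuntimeError):
    """Raised in place of a real dependency failure during an experiment."""


def inject_fault(failure_rate, rng):
    """Make the wrapped function fail with probability `failure_rate`."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # rng.random() is in [0.0, 1.0): rate 0.0 never fails, 1.0 always does.
            if rng.random() < failure_rate:
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)

        return wrapper

    return decorator


def call_with_retries(func, attempts):
    """Call `func` up to `attempts` times, re-raising the last injected fault."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except InjectedFault as exc:
            last_error = exc
    raise last_error
```

Passing a seeded `random.Random` instance as `rng` keeps an experiment repeatable, and a `failure_rate` of 0.0 turns the injection off without removing the wrapper.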

It is incredible to realize that, today, I am working in one of these programs: AWS Fault Injection Simulator, a managed service that lets customers inject faults into AWS workloads.

We are carrying on with what Jesse started almost two decades ago.

Deleting that database wasn’t that bad after all.

I still wouldn’t recommend it, though :)

Check out Jesse’s talk and the associated blog post to learn more about his work at Amazon.

That’s all for now, folks. I hope you have enjoyed this post. Don’t hesitate to give me feedback, share your opinion, or clap your hands. Thank you!

Adrian
