Build a serverless multi-region, active-active backend solution in an hour

The solution is built using DynamoDB Global Tables, AWS Lambda, regional API Gateway, and Route53 routing policies

In the previous posts, we explored availability and reliability, as well as why and how to build a multi-region, active-active architecture on AWS. In this blog post, I will walk you through the steps needed to build and deploy a serverless multi-region, active-active backend.

This actually blows my mind: I can explain it all in one blog post, and you should be able to deploy a fully functional backend in about an hour. Only a few years ago, it would have required a lot more expertise, work, time and money!

So first, let’s take a look at the final solution to get an idea of what’s coming.

An overview of the solution

The solution presented here is fairly simple. It leverages DynamoDB Global Tables, which provide a fully managed, multi-region, multi-master database.

We’ll also leverage AWS Lambda for the business logic and the new regional API endpoints in API Gateway. Finally, our solution uses Route 53 routing policies to dynamically route traffic between two AWS regions.

Let’s get started!

Step #1: Creating a Global Table in DynamoDB

Remember that a DynamoDB global table consists of multiple replica tables, one per region of choice (at the time of writing, five regions are supported), that DynamoDB treats as a single unit. Every replica has the same table name and the same primary key schema.

To start creating a global table, open the DynamoDB console and create a table with a primary key. In our example, we’ll use MyGlobalTable with item_id as the primary key; then click Create. This table will serve as the first replica table in the global table.
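If you prefer to script this step, the request below mirrors the console settings. It is a minimal sketch that only builds the keyword arguments for boto3's DynamoDB `create_table` call; the string type for item_id and the capacity of 5 read and 5 write units are assumptions, not values from this post.

```python
def build_create_table_request(table_name="MyGlobalTable"):
    """Keyword arguments for boto3's DynamoDB client create_table call."""
    return {
        "TableName": table_name,
        # item_id is the partition (HASH) key; "S" assumes string IDs.
        "AttributeDefinitions": [{"AttributeName": "item_id", "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": "item_id", "KeyType": "HASH"}],
        # Assumed capacity values; adjust to your workload.
        "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
    }


# With AWS credentials configured, you could then run:
#   import boto3
#   boto3.client("dynamodb", region_name="eu-west-1").create_table(
#       **build_create_table_request())
```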

Once the table is created, select the Global Tables tab.

You will notice that before you can create a global table, you need to enable DynamoDB Streams on the table.

Click Enable streams.

Note: You will notice a pop-up mentioning the view type of stream that is being used — New and old images. This simply means that both the new and the old images of the item in the table will be written to the stream whenever data in the table is modified. This stream is, of course, used to replicate the data across regions.
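The same stream setting can be expressed in code. This sketch builds the arguments for boto3's DynamoDB `update_table` call on an existing table; the identical `StreamSpecification` block can also be passed to `create_table` directly.

```python
def build_enable_streams_request(table_name="MyGlobalTable"):
    """Keyword arguments for boto3's DynamoDB client update_table call."""
    return {
        "TableName": table_name,
        "StreamSpecification": {
            "StreamEnabled": True,
            # Write both the new and the old image of an item on every change.
            "StreamViewType": "NEW_AND_OLD_IMAGES",
        },
    }
```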

You can then add regions to your global table where you want to deploy replica tables.

This will start the table creation process in the region of your choice. After a few seconds, you should be able to see the different regions forming your newly created global table.

You can do the same thing with the AWS CLI — and in fact it is encouraged!
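A sketch of that scripted route, assuming the legacy (2017.11.29) version of global tables used at the time of this post. With that version, a table with the same name, key schema and stream settings must already exist in each region before you call `create_global_table`. (Newer, 2019.11.21 global tables add replicas through `update_table` and its `ReplicaUpdates` parameter instead.) The helper renders the same request both as boto3 keyword arguments and as an AWS CLI command line.

```python
REGIONS = ["eu-west-1", "eu-central-1"]  # Ireland and Frankfurt


def build_create_global_table_request(table_name, regions):
    """Keyword arguments for the legacy DynamoDB create_global_table call."""
    if len(set(regions)) < 2:
        raise ValueError("a global table needs replicas in at least two regions")
    return {
        "GlobalTableName": table_name,
        "ReplicationGroup": [{"RegionName": region} for region in regions],
    }


def to_cli(request, region):
    """Render the same request as an AWS CLI argument list."""
    args = [
        "aws", "dynamodb", "create-global-table",
        "--global-table-name", request["GlobalTableName"],
        "--replication-group",
    ]
    args += [f"RegionName={g['RegionName']}" for g in request["ReplicationGroup"]]
    args += ["--region", region]
    return args
```

Joined with spaces, `to_cli(build_create_global_table_request("MyGlobalTable", REGIONS), "eu-west-1")` gives `aws dynamodb create-global-table --global-table-name MyGlobalTable --replication-group RegionName=eu-west-1 RegionName=eu-central-1 --region eu-west-1`.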

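You can then test the global table by writing an item (our example uses the item_id “foobar”) to the replica in one region and reading it back from the replica in the other.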
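A minimal sketch of that test's requests in DynamoDB's low-level JSON format, usable with boto3's client `put_item` and `get_item`. The extra attribute in the usage comment is a hypothetical example, and every extra attribute is stored as a string here for simplicity. Replication between regions is asynchronous, so allow a moment before reading from the second region.

```python
def build_put_item_request(table_name, item_id, **attributes):
    """Low-level put_item arguments; extra attributes are stored as strings."""
    item = {"item_id": {"S": item_id}}
    for name, value in attributes.items():
        item[name] = {"S": str(value)}
    return {"TableName": table_name, "Item": item}


def build_get_item_request(table_name, item_id):
    """Low-level get_item arguments for a single item_id."""
    return {"TableName": table_name, "Key": {"item_id": {"S": item_id}}}


# Hypothetical usage with boto3:
#   ireland = boto3.client("dynamodb", region_name="eu-west-1")
#   frankfurt = boto3.client("dynamodb", region_name="eu-central-1")
#   ireland.put_item(**build_put_item_request("MyGlobalTable", "foobar", color="blue"))
#   frankfurt.get_item(**build_get_item_request("MyGlobalTable", "foobar"))
```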

Have you noticed the new attributes created by DynamoDB Global Tables? The cross-region replication process adds the aws:rep:updateregion and aws:rep:updatetime attributes so you can track each item’s origin. Your application can read both attributes but, of course, should not modify them.
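Reading these attributes, and leaving them out of what you hand to API clients, takes only a few lines. This sketch works on items in low-level DynamoDB JSON and assumes aws:rep:updateregion is a string (S) and aws:rep:updatetime a number (N); the prefix filter also drops any other aws:rep:* attribute the replication process adds.

```python
REPLICATION_PREFIX = "aws:rep:"


def replication_info(item):
    """Return (origin region, update time) from a low-level DynamoDB item."""
    region = item.get("aws:rep:updateregion", {}).get("S")
    # Numbers arrive as strings in the low-level format, e.g. {"N": "1512345678.1"}.
    updated = item.get("aws:rep:updatetime", {}).get("N")
    return region, updated


def without_replication_attributes(item):
    """Copy of the item without the aws:rep:* bookkeeping attributes."""
    return {k: v for k, v in item.items() if not k.startswith(REPLICATION_PREFIX)}
```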

Step #2: Creating the backend using AWS Lambda and API Gateway

Since this part of our solution leverages the new regional API endpoints, let’s look at those first.

API Gateway Regional Endpoints

A regional API endpoint is a new type of endpoint that is accessed from the same AWS region in which your REST API is deployed. This improves request latency when API requests originate from the same region as your REST API.

Backend functions

For demo purposes, we’ll create three functions: one to post an item to DynamoDB, one to get an item from DynamoDB, and a health check to ensure the backend is healthy.

Note: Did you notice the extreme complexity of the health function? I know… this is done so I can easily test the failover mechanism in Route 53 later on. Be smarter than me, please :)
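For illustration, here is a minimal Python sketch of three such handlers. It assumes API Gateway's Lambda proxy integration, a path parameter named item_id, and a STATUS environment variable for the health check (the variable Step #6 changes). The table is passed in as a parameter so the logic can run without AWS; all function names and the TABLE_NAME variable are hypothetical.

```python
import json
import os
from decimal import Decimal


def _response(status, body):
    """Response shape expected by API Gateway's Lambda proxy integration."""
    # default=str turns the Decimal values boto3 uses for numbers into strings.
    return {"statusCode": status, "body": json.dumps(body, default=str)}


def post_item(event, table):
    """Store the JSON request body as an item; item_id is required."""
    try:
        # The boto3 DynamoDB resource rejects floats, so parse them as Decimal.
        item = json.loads(event.get("body") or "{}", parse_float=Decimal)
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})
    if not isinstance(item, dict) or "item_id" not in item:
        return _response(400, {"error": "item_id is required"})
    table.put_item(Item=item)
    return _response(201, item)


def get_item(event, table):
    """Fetch one item by the item_id path parameter."""
    item_id = (event.get("pathParameters") or {}).get("item_id")
    if not item_id:
        return _response(400, {"error": "item_id is required"})
    result = table.get_item(Key={"item_id": item_id})
    if "Item" not in result:
        return _response(404, {"error": "item not found"})
    return _response(200, result["Item"])


def health(event, context=None):
    """Deliberately trivial: answer with the status code in STATUS (default 200)."""
    status = int(os.environ.get("STATUS", "200"))
    return _response(status, {"status": status})


# Hypothetical wiring in the deployed functions:
#   import boto3
#   TABLE = boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"])
#   def post_handler(event, context):
#       return post_item(event, TABLE)
#   def get_handler(event, context):
#       return get_item(event, TABLE)
```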

To deploy these functions, let’s use the Serverless Framework. Why? I have been using it since it was initially launched as JAWS, and I like it: it’s that simple. You can also do the same thing with the AWS Serverless Application Model (SAM).

The template to deploy the APIs using the serverless framework is the following:

Note: Please make sure to replace the “xxxxxxxxxxxxx” in the serverless.yml file with the ID of the AWS account in which you previously created the DynamoDB tables.

Deploy the API in both Ireland and Frankfurt — or the regions you used for your DynamoDB tables.
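A minimal sketch for deploying the same service to both regions, assuming the `serverless` CLI is installed and the stage is named dev (the stage used in Step #3). It also shows the DynamoDB table ARN format, which is where the account ID appears when granting the functions access to the table; the account ID in the usage comment is a placeholder.

```python
import shlex

REGIONS = {"eu-west-1": "Ireland", "eu-central-1": "Frankfurt"}


def deploy_commands(stage="dev", regions=REGIONS):
    """One `serverless deploy` command per region, as argument lists."""
    return [["serverless", "deploy", "--stage", stage, "--region", region]
            for region in regions]


def table_arn(region, account_id, table="MyGlobalTable"):
    """ARN of the regional replica table."""
    return f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"


# Print the commands; subprocess.run(cmd, check=True) would actually deploy.
#   for cmd in deploy_commands():
#       print(shlex.join(cmd))
#   table_arn("eu-west-1", "123456789012")
```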

Note: If you get an error in the deployment regarding the endpointType, comment it out in the serverless.yml file and deploy again. You can then log into the API Gateway console and turn the endpoint type into a regional one manually.
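Instead of the console, the endpoint type can also be switched with API Gateway's `update_rest_api` call and a patch operation on `/endpointConfiguration/types`. This sketch builds those arguments; the REST API ID in the test is a placeholder, and it assumes the API was deployed with the default EDGE type.

```python
def build_make_regional_request(rest_api_id, current_type="EDGE"):
    """update_rest_api arguments that switch an API's endpoint type to REGIONAL."""
    return {
        "restApiId": rest_api_id,
        "patchOperations": [{
            "op": "replace",
            # The path names the endpoint type currently configured.
            "path": f"/endpointConfiguration/types/{current_type}",
            "value": "REGIONAL",
        }],
    }


# Hypothetical usage, once per region:
#   boto3.client("apigateway", region_name="eu-west-1").update_rest_api(
#       **build_make_regional_request("a1b2c3d4e5"))
```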

Testing the regional APIs

Since we’ve already inserted a “foobar” item into the database earlier, we can try accessing it through the REST API.

Great! Our item is accessible from both regions. Now let’s create a new one.

Wonderful — it is working.
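To script these checks against each region, a small standard-library sketch. It assumes the default execute-api invoke URL format and hypothetical `GET /item/{item_id}` and `POST /item` routes; use the paths from your own serverless.yml and the API IDs printed at deploy time.

```python
import json
import urllib.parse
import urllib.request


def invoke_url(api_id, region, stage="dev"):
    """Base URL of a regional REST API stage."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}"


def get_item_request(base_url, item_id):
    # Hypothetical GET /item/{item_id} route; the ID is URL-encoded.
    quoted = urllib.parse.quote(item_id, safe="")
    return urllib.request.Request(f"{base_url}/item/{quoted}", method="GET")


def post_item_request(base_url, item):
    # Hypothetical POST /item route accepting a JSON body.
    return urllib.request.Request(
        f"{base_url}/item",
        data=json.dumps(item).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Perform the call and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=10) as resp:
        return resp.status, json.loads(resp.read())
```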

Step #3: Creating the Custom Domain Names

Next, let’s create an Amazon API Gateway custom domain name endpoint for these regional API endpoints. To do that, you need a hosted zone and domain available in Route 53, as well as an SSL certificate for that domain name.

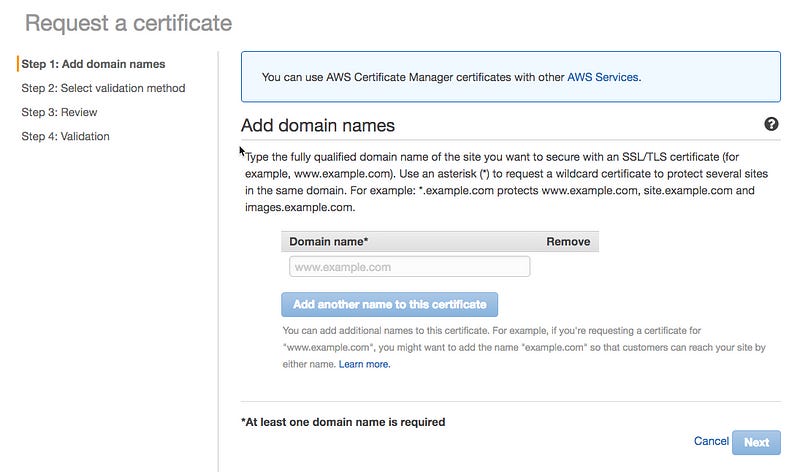

Now request an SSL certificate from AWS Certificate Manager (ACM) in each region where you have deployed the backend with API Gateway and AWS Lambda.

At the end of the request, ACM asks you to validate that you own the domain, either by email or by adding a special record set to your Route 53 DNS configuration.
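The same request can be scripted with ACM's `request_certificate` call. A regional API Gateway custom domain needs a certificate issued in the API's own region, hence one request per region. This sketch defaults to DNS validation (an assumption; EMAIL works too) and uses this post's domain, which you would replace with yours.

```python
def build_certificate_request(domain_name, validation="DNS"):
    """Arguments for ACM request_certificate."""
    if validation not in ("DNS", "EMAIL"):
        raise ValueError("ValidationMethod must be DNS or EMAIL")
    return {"DomainName": domain_name, "ValidationMethod": validation}


def requests_per_region(domain_name, regions=("eu-west-1", "eu-central-1")):
    """One certificate request per backend region."""
    return {region: build_certificate_request(domain_name) for region in regions}


# Hypothetical usage:
#   for region, kwargs in requests_per_region("globe.adhorn.me").items():
#       boto3.client("acm", region_name=region).request_certificate(**kwargs)
```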

Once everything is validated, you can configure the API Gateway endpoint in each region with a custom domain name. In both regions, you configure the same custom domain name. Note that the base path mapping must be on the root path ‘/’, with the previously deployed API as the destination and dev as the stage.
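In code, this step maps to API Gateway's `create_domain_name` and `create_base_path_mapping` calls, run in each region with that region's certificate and API. A minimal sketch of the arguments; the certificate ARN and REST API ID in the test are placeholders. The `create_domain_name` response includes a `regionalDomainName` field, which is the Target Domain Name shown in the console.

```python
def build_domain_requests(domain_name, certificate_arn, rest_api_id, stage="dev"):
    """Arguments for create_domain_name and create_base_path_mapping."""
    create_domain = {
        "domainName": domain_name,
        # Regional endpoints take a certificate from the same region.
        "regionalCertificateArn": certificate_arn,
        "endpointConfiguration": {"types": ["REGIONAL"]},
    }
    # Omitting basePath maps the API to the root path "/".
    mapping = {"domainName": domain_name, "restApiId": rest_api_id, "stage": stage}
    return create_domain, mapping
```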

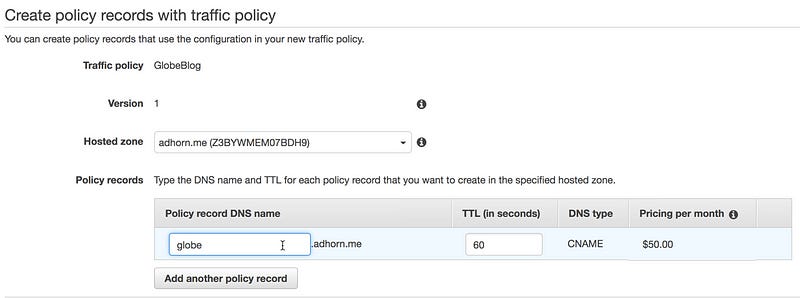

Note: Make sure the name of that custom domain name in the name field matches your domain name or it will simply not work (I used globe.adhorn.me).

Once you have configured the new custom domain name, note the Target Domain Name in each region; you will need them in the final steps.

Step #4: Adding health checks in Route 53

Once you have two or more resources that perform the same function, such as our multi-region, active-active backend, you can use the health-checking feature of Route 53 to route traffic only to the “healthy” resources.

Let’s configure our health check using the health API previously deployed.

Do the same for each region’s health check API. Once complete, you should see the health status turn green in the Route 53 health checks.
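Each health check can also be created with Route 53's `create_health_check` call. An HTTPS check passes when the endpoint answers with a 2xx or 3xx status, which is why returning 404 from the health function will later mark a region as unhealthy. This sketch assumes the check targets the regional execute-api hostname with a hypothetical `/dev/health` path; interval and threshold are example values.

```python
import uuid


def build_health_check_request(api_host, stage="dev", path="health"):
    """Arguments for Route 53 create_health_check against a regional health API."""
    return {
        # Unique per health check; reuse it on a retry to avoid a duplicate check.
        "CallerReference": str(uuid.uuid4()),
        "HealthCheckConfig": {
            "Type": "HTTPS",
            "FullyQualifiedDomainName": api_host,
            "Port": 443,
            "ResourcePath": f"/{stage}/{path}",
            "RequestInterval": 30,  # seconds between checks
            "FailureThreshold": 3,  # consecutive failures before Unhealthy
        },
    }
```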

Step #5: Adding a routing policy between regions with Route 53

Now the last part — adding the routing policy between the two regional APIs deployed in Frankfurt and in Ireland.

Go into the Route 53 console and add a traffic policy as follows. Use the Target Domain Name created by API Gateway when configuring the custom domain name in order to set the endpoint value in the policy.
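The console builds a JSON traffic policy document behind the scenes, which you can also pass to Route 53's `create_traffic_policy` call. A minimal sketch, assuming a latency rule with one health check per region and CNAME records pointing at each region's Target Domain Name; the hostnames and health check IDs in the test are placeholders.

```python
import json


def build_latency_policy(endpoints):
    """Route 53 traffic policy document with a latency rule across regions.

    endpoints maps region -> (target domain name, health check ID).
    """
    doc = {
        "AWSPolicyFormatVersion": "2015-10-01",
        "RecordType": "CNAME",
        "StartRule": "latencyRule",
        "Endpoints": {},
        "Rules": {"latencyRule": {"RuleType": "latency", "Regions": []}},
    }
    for i, (region, (target, health_check_id)) in enumerate(endpoints.items()):
        ref = f"endpoint{i}"
        doc["Endpoints"][ref] = {"Type": "value", "Value": target}
        # Route 53 skips a region whose health check is failing.
        doc["Rules"]["latencyRule"]["Regions"].append(
            {"Region": region, "EndpointReference": ref, "HealthCheck": health_check_id}
        )
    return json.dumps(doc)


# Hypothetical usage:
#   boto3.client("route53").create_traffic_policy(
#       Name="globe-latency", Document=build_latency_policy(endpoints))
```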

And finally, create a policy record, with your DNS name, that uses the traffic policy previously created.
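The policy record corresponds to Route 53's `create_traffic_policy_instance` call. A sketch of its arguments; the hosted zone ID and policy ID in the test are placeholders, and the 60-second TTL is an assumed value (a short TTL lets resolvers pick up a failover sooner).

```python
def build_policy_record_request(hosted_zone_id, record_name, policy_id,
                                version=1, ttl=60):
    """Arguments for Route 53 create_traffic_policy_instance."""
    return {
        "HostedZoneId": hosted_zone_id,
        "Name": record_name,
        "TTL": ttl,  # seconds resolvers may cache the answer
        "TrafficPolicyId": policy_id,
        "TrafficPolicyVersion": version,
    }
```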

Voilà! Your multi-region, active-active backend is ready to be tested!

And it works like a charm!

Step #6: Testing the failover feature in Route 53

Modify the value returned by the beautifully crafted (sarcasm…) makehealthcheckcalls() function: in the AWS Lambda console in eu-west-1 (Ireland), set the environment variable STATUS to 404 and save.
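The same change can be made with Lambda's `update_function_configuration` call. Its `Environment` parameter replaces the function's whole variable map, so this sketch merges the existing variables back in. The function name and the TABLE_NAME variable in the test are hypothetical.

```python
def build_set_status_request(function_name, status, existing_vars=None):
    """update_function_configuration arguments that set STATUS.

    Environment replaces all variables, so existing ones are merged in.
    """
    variables = dict(existing_vars or {})
    variables["STATUS"] = str(status)  # environment values must be strings
    return {"FunctionName": function_name, "Environment": {"Variables": variables}}
```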

After a short while, you should see the health check status in Route 53 change from Healthy to Unhealthy. Ideally, you would also have created a CloudWatch alarm for the health check.
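Such an alarm can be defined with CloudWatch's `put_metric_alarm` call on the `HealthCheckStatus` metric, which Route 53 publishes in us-east-1 (1 when healthy, 0 when unhealthy). This sketch assumes a one-minute period and an optional SNS topic for notifications; the alarm name format is hypothetical.

```python
def build_health_alarm_request(health_check_id, sns_topic_arn=None):
    """CloudWatch put_metric_alarm arguments for a Route 53 health check.

    Create the alarm with a CloudWatch client in us-east-1.
    """
    request = {
        "AlarmName": f"route53-health-{health_check_id}",
        "Namespace": "AWS/Route53",
        "MetricName": "HealthCheckStatus",
        "Dimensions": [{"Name": "HealthCheckId", "Value": health_check_id}],
        "Statistic": "Minimum",
        "Period": 60,
        "EvaluationPeriods": 1,
        # Below 1 means at least one unhealthy reading in the period.
        "Threshold": 1.0,
        "ComparisonOperator": "LessThanThreshold",
    }
    if sns_topic_arn:
        request["AlarmActions"] = [sns_topic_arn]
    return request
```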

Now, try creating a bunch of items into the database.

As you can see below, all the newly created items have eu-central-1 (Frankfurt) as their origin region, so the failover using the Route 53 health check worked as expected.
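To check this programmatically, you can tally items by their aws:rep:updateregion attribute. A minimal sketch over items in low-level DynamoDB JSON, such as the `Items` list returned by a `scan` (large tables need pagination via `LastEvaluatedKey`); items without the attribute are counted as "unknown".

```python
from collections import Counter


def origin_regions(items):
    """Count items by the region that last wrote them."""
    return Counter(
        item.get("aws:rep:updateregion", {}).get("S", "unknown") for item in items
    )
```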

That’s a wrap!

Now you might be wondering if that is it — well, yes. This post is an introduction to give you new ideas, and maybe challenge some old ones.

There are plenty of things you could do to improve the solution presented here, some of which could be adding authentication, monitoring, continuous integration and deployment, just to name a few.

I did not include authentication simply because Cognito currently does not support synchronizing user pools across regions, so you would need to build that yourself. There is already a forum thread collecting +1s for that feature, so if you would like it too, add yours ;-)

In this post, we have gone through building a serverless multi-region, active-active backend, powered by DynamoDB Global Tables, AWS Lambda and API Gateway. We have also learned how to route traffic between regions using Route 53 while supporting a failover mechanism.

I am still blown away by this — since only a few years ago, it would have required a LOT more work. Back on the Christmas Eve of 2012, Netflix streaming service experienced an outage which pretty much was the starting point of their journey into building an active-active multi-region architecture. That’s also when I fell in love with resilient architectures — and I hope you too will get inspired and push the limits of what is currently possible in order to design the solutions of tomorrow.

In the next series of posts, I will talk about Chaos Engineering and especially discuss why breaking things should be practised. And I will use this serverless multi-region, active-active architecture presented here as the starting point for the experiments. Stay tuned!

[UPDATE] — I published VPC support for AWS Lambda and DynamoDB here!