Best practices for a successful chaos engineering journey

Unleashing the Full Potential of Chaos Engineering — Part 2

“Tell me and I forget, teach me and I may remember, involve me and I learn.” ― Benjamin Franklin

Welcome to Part 2 of the chaos engineering blog series! In Part 1, we took a deep dive into the various experimentation modes in chaos engineering, exploring their unique benefits and applications. Now, in Part 2, we will shift our focus to the essential best practices that will empower you to achieve success in chaos engineering. Building on the foundation laid in Part 1, we will delve into practical strategies and insights gathered from years of experience in the field.

Before we get started, a word of caution.

The best practices presented in this blog post are based on my personal experiences in chaos engineering. Over the course of more than a decade, I’ve had the privilege of gaining practical insights through my own experiments, as well as supporting colleagues, teams, and customers in their chaos engineering endeavors. However, please adapt them to your specific context and requirements, as every organization and system is unique. These practices should only serve as helpful guidance. They are not an exhaustive checklist. Experimentation, iteration, and patience are crucial for success in chaos engineering. Use these best practices as a reference, but be prepared to make adjustments based on your own experiences.

Buckle up!

(Note: BP stands for Best Practice.)

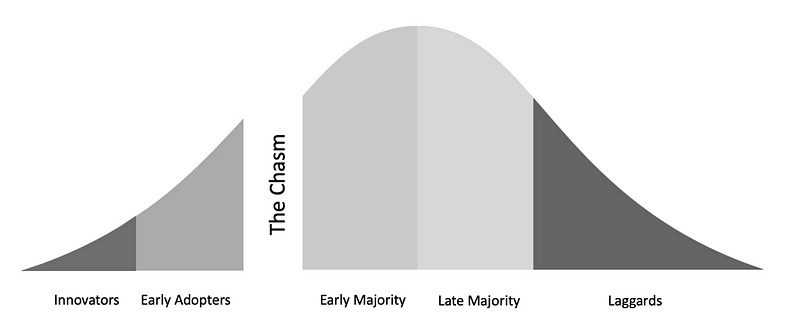

BP0 — Beware of the Chasm

The first best practice might come as a surprise, but it addresses something I see all the time, and it prevents the wide adoption of chaos engineering within organizations.

According to the book Crossing the Chasm by Geoffrey A. Moore, technology adoption starts with innovators and progresses to early adopters, early majority, late majority, and finally laggards. Innovators and early adopters are driven by their passion for technology and are willing to make sacrifices to be among the first to try new things. They’re the ones you see camping outside Apple stores for the latest iPhone release.

Then we have the early majority, who take a more cautious approach. They wait until they see tangible productivity improvements before embracing new technology.

The book talks about a significant gap, or “chasm,” between early adopters and the early majority when it comes to adopting a product. This transition is not smooth and poses challenges for companies.

Once a product gains traction among innovators and early adopters, it requires adapting to a new target market. This involves changes in product concept, positioning, marketing strategy, distribution channels, and even pricing.

This process of successfully transitioning from early adopters to the early majority is known as “Crossing the Chasm.” It highlights the importance of strategic adjustments and a focused approach to meet the unique needs of the early majority segment.

What’s fascinating about the “Crossing the Chasm” theory is that it applies not only to mainstream customers but also to the adoption of technology within a company itself. It helps explain why certain teams embrace new concepts and ideas earlier than others, and why different teams within a company can be so distinct from one another.

This is precisely why many companies venture into chaos engineering but struggle to make it a widespread practice throughout their organization. While some teams show interest and may even successfully start implementing it, they encounter challenges in establishing it as a standard discipline across the entire organization.

The reason behind this is that the team that experiments with and succeeds in chaos engineering might be part of the innovators or early adopters. They might have stumbled upon a winning formula and have individuals within the team who are willing to navigate the initial difficulties.

However, the rest of the organization doesn’t automatically follow suit. They need to be convinced of the productivity improvements before fully embracing the concept. They won’t simply buy into it blindly. So, convincing them becomes a crucial task. Which leads me to BP1.

BP1 — Understand the why and set goals for your journey

When introducing chaos engineering in your organization, it’s vital to begin by explaining the “Why” behind it. Many chaos engineering leaders tend to dive straight into the “what” and “how” without addressing the underlying purpose, assuming that everyone shares their interest in the technology itself. However, if you want to persuade others, especially business stakeholders, you need to emphasize the benefits that chaos engineering can bring to the organization.

To effectively highlight the “Why,” you should demonstrate how chaos engineering can enhance various aspects of the business.

A strong why, for example, would be linking chaos engineering to the cost of downtime and showcasing how it can mitigate that risk. This speaks to the business’s bottom line. Time to recovery is especially critical if you look at the latest estimates from IDC and the Ponemon Institute.

One hundred thousand dollars: that’s the average cost per hour of infrastructure downtime.

Of course, these are averages, but they put a lot of pressure on businesses. Now think again about your previous outages. How long did they last?

Understanding the “Why” is also crucial for setting goals in your chaos engineering practice. Clear goals focused on user experience, service recovery, adoption, experiments, or metrics are essential for long-term engagement and investment in chaos engineering. Leadership-driven goals, tracked through regular business reviews, provide momentum and commitment.

Finding suitable goals in your organization involves strategic and tactical considerations. Look for specific events or capabilities to prepare for, or align with existing senior leadership goals. The key is to have goals that align with your organization’s needs, giving purpose and direction to your chaos engineering efforts.

BP2 — Understanding the steady-state of your system

The primary objective of understanding your system’s steady-state is to ensure a smooth transition back to a well-established operating condition after introducing artificial failures, thus eliminating any interference with the system’s normal behavior.

Rather than focusing solely on internal system attributes like CPU or memory, it is crucial to identify measurable outputs that follow a predictable pattern during normal operation and vary significantly when a failure actually affects the system. The hypothesis of an experiment is that your system is resilient against the injected fault, so the steady state is expected to remain stable even in the presence of that failure.

Let’s consider some examples of steady states. Amazon.com, for instance, employs the number of orders as a key metric for its steady state. As the load time increases by a certain percentage, the number of orders and sales exhibit a corresponding decrease. This makes the number of orders an excellent indicator of the steady state.

Netflix adopts a server-side metric known as the “Pulse of Netflix,” which measures the number of playback starts initiated by users. They discovered that the “SPS” (starts-per-second) metric follows a predictable pattern and experiences significant fluctuations during system failures. This metric captures the essence of Netflix’s steady state.

Both Amazon’s number of orders and Netflix’s Pulse of Netflix represent robust steady states as they effectively combine customer experience and operational metrics into a single, measurable, and highly predictable output.
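
To make the idea concrete, here is a minimal sketch of a steady-state check. It assumes the steady state is a single business metric (orders per minute in this example) and that "stable" means the average observed during the experiment stays within a tolerance of the baseline average. The function name, the 10% tolerance, and the sample values are all my own assumptions for illustration, not how Amazon or Netflix do it.

```python
from statistics import mean


def within_steady_state(baseline, observed, tolerance=0.10):
    """Return True if the observed average stays within `tolerance`
    (a fraction, e.g. 0.10 for 10%) of the baseline average."""
    expected = mean(baseline)
    actual = mean(observed)
    if expected == 0:
        return actual == 0
    return abs(actual - expected) / expected <= tolerance


# Hypothetical orders-per-minute samples, for illustration only.
baseline_orders = [100, 104, 98, 102]
during_experiment = [97, 99, 101, 95]

print(within_steady_state(baseline_orders, during_experiment))
```

In practice the baseline would come from your monitoring system, and a real check would likely account for daily or weekly seasonality rather than a flat average.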

BP3 — Monitoring

Properly measuring your system is crucial for monitoring any deviations from the steady state. Investing in comprehensive measurements is essential, covering business metrics, customer experience, system metrics, and operational metrics. By capturing and graphing these measurements, unexpected correlations between metrics can often be discovered.

Two aspects of monitoring should be considered: monitoring the application itself and monitoring the chaos experiments. Both aspects are equally critical. By closely monitoring application metrics, deviations from expected behavior or performance can be detected early, enabling proactive measures to address issues. Similarly, monitoring chaos experiments is crucial for gathering valuable data on system resilience and identifying weaknesses. This data drives iterative improvements in system architecture, design, and recovery procedures.

To effectively monitor your applications during chaos experiments, consider the following guidelines:

Business metrics: Measure key performance indicators (KPIs) that align with your business goals. These metrics provide insights into how an experiment impacts your overall business, whether it relates to revenue or other critical aspects. Example business metrics include the number of orders placed, debit or credit card transactions, or flights purchased.

Application metrics: Monitor key performance indicators (KPIs) related to the application’s functionality, such as response time, throughput, error rates, and resource utilization. These metrics help assess the impact of the chaos experiment on the application’s performance.

Infrastructure and system metrics: Monitor the health and performance of the underlying infrastructure, including CPU usage, memory usage, network latency, queue length, throttling, and disk I/O. These metrics provide insights into how the infrastructure is affected by the chaos experiment.

Error and failure metrics: Keep track of error rates, failures, and exceptions generated during the chaos experiment. This helps identify any issues or vulnerabilities that arise under disruptive conditions.

Service dependency metrics: Monitor the behavior and performance of the services or components that the application relies on. This includes measuring response times, error rates, and throughput of external APIs, databases, or third-party services.

User Experience metrics: Capture user-centric metrics like page load times, transaction completion rates, or perceived latency. This helps understand how the chaos experiment impacts the end-user experience.

Operational metrics: Track operational metrics to ensure the sustainability and maintenance of your system. These metrics help understand the impact of an experiment on operational aspects of the application. Examples include the number of tickets and the time from detection to recovery.

Chaos dashboard: Creating a dashboard dedicated to chaos engineering experiments is a recommended practice that greatly aids the entire journey. During the experiment-design stage, it compels the implementation of specific metrics for hypothesis validation. Additionally, the dashboard facilitates the consolidation of various key metrics and allows for peer review. Throughout the execution of the chaos experiment, it provides a clear overview of both the failure injection status and the system under test. Finally, delving deeper into the dashboard enables a data-driven post-incident analysis.

BP4 — Logging

Collect and analyze application and system logs to gain detailed insights into the behavior of various components, trace requests, and identify any anomalies or failures. If possible, instrument logging to ensure that any impact caused by an experiment is easily identifiable in the logs. Logging is particularly helpful for investigating the effects of the experiment.
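
One way to make the impact of an experiment easy to spot in the logs is to tag every record with an experiment identifier. The sketch below uses Python’s standard `logging` module. The `ExperimentFilter` class, the `build_logger` helper, the log format, and the `cache-stop-001` identifier are hypothetical names I chose for illustration.

```python
import io
import logging


class ExperimentFilter(logging.Filter):
    """Attach the active experiment ID to every log record."""

    def __init__(self, experiment_id):
        super().__init__()
        self.experiment_id = experiment_id

    def filter(self, record):
        record.experiment_id = self.experiment_id
        return True  # never drop records, only annotate them


def build_logger(experiment_id, stream):
    """Create a logger whose output carries the experiment ID."""
    logger = logging.getLogger(f"chaos.{experiment_id}")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("%(levelname)s experiment=%(experiment_id)s %(message)s")
    )
    logger.handlers.clear()
    logger.filters.clear()
    logger.addHandler(handler)
    logger.addFilter(ExperimentFilter(experiment_id))
    return logger


buffer = io.StringIO()  # stands in for a real log destination
log = build_logger("cache-stop-001", buffer)
log.warning("cache connection timed out, falling back to database")
print(buffer.getvalue(), end="")
```

With a tag like this in place, you can filter the logs for `experiment=cache-stop-001` and separate the effects of the experiment from unrelated noise.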

BP5 — Build a resilience model of your system and formulate hypotheses

To ensure your team’s assumptions about recovery paths and failure scenarios are accurate, it’s valuable to build a resilience model. This model helps determine the expected impact of failures and validate your team’s recovery strategies. Start by identifying the most critical and resource-intensive APIs and understanding their dependencies.

Having a clear picture of these dependencies is essential as the accuracy of your model, and thus the hypothesis, relies on it. By modeling system resilience, you can anticipate potential business losses during disruptions and gain insights into detection and prevention measures.

A resilience model also helps prioritize chaos experiments effectively. By mapping the impact of failures, you can show that choosing one experiment over another reduces the system’s risk by avoiding the most significant incidents.

Creating a resilience model might seem overwhelming at first, but you can start by asking simple “what if” questions and thoroughly exploring their consequences and timing. For example, consider scenarios like instance termination, cache failure, increased latency to dependencies, or database stoppage. By answering these questions, you’ll gradually build a comprehensive understanding of your system’s resilience.

Here’s an example answer to “What if the cache stops?”:

“When the cache stops responding to requests, the system will continue to respond to customer requests within 500ms. The application will detect the failed cache connection within 150ms (client timeout) and retrieve the data directly from the database. After encountering 5 client timeout errors, the circuit breaker will engage, bypassing the cache entirely and fetching data directly from the database. As a result, customer requests will be fulfilled within 300ms. An alarm will be triggered, and a Sev2 ticket will be created for the team if the circuit breaker remains engaged for more than 3 minutes.”

This answer becomes the hypothesis that will be verified through an experiment. Remember, chaos engineering experiments aim to confirm or refute assumptions about a system’s behavior under fault injection and ensure it performs as expected. The expected outcome of the experiment should be defined beforehand, and the actual execution of the experiment should provide data that builds confidence in the system’s ability to handle failures correctly.
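
The behavior described in the cache hypothesis can be sketched in a few lines. This is a simplified illustration, not a production circuit breaker: it assumes the five timeouts are consecutive, it omits the logic for closing the breaker again once the cache recovers, and it leaves out the alarm and ticketing. The class and function names are hypothetical.

```python
class CacheTimeout(Exception):
    """Raised when the cache does not answer within the client timeout."""


class CacheCircuitBreaker:
    """Bypass the cache after `threshold` consecutive timeouts."""

    def __init__(self, cache_get, db_get, threshold=5):
        self.cache_get = cache_get
        self.db_get = db_get
        self.threshold = threshold
        self.timeouts = 0

    @property
    def is_open(self):
        return self.timeouts >= self.threshold

    def get(self, key):
        if self.is_open:
            return self.db_get(key)  # cache bypassed entirely
        try:
            value = self.cache_get(key)
        except CacheTimeout:
            self.timeouts += 1
            return self.db_get(key)  # fall back for this request
        self.timeouts = 0  # a healthy response resets the count
        return value


# Simulate "What if the cache stops?" with a cache that always times out.
cache_calls = []


def stopped_cache(key):
    cache_calls.append(key)
    raise CacheTimeout(key)


def database(key):
    return f"db:{key}"


breaker = CacheCircuitBreaker(stopped_cache, database)
results = [breaker.get("item-1") for _ in range(8)]
print(breaker.is_open, len(cache_calls))
```

Every request is still served from the database, and the cache is no longer contacted once the breaker opens. An experiment would verify that the real system behaves the same way and within the latencies stated in the hypothesis.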

BP6 — Foster a collective responsibility for hypotheses

To create a strong hypothesis, involve the whole team, not just one engineer. Gather everyone involved, including the product owner, technical product manager, developers, designers, architects, and more. Have each team member write their own response to the “What if…?” question, encouraging unique ideas and perspectives.

During the discussion, you’ll uncover different answers and some team members may not have considered the hypothesis. Use this opportunity to explore diverse perspectives on how the product should behave in different scenarios. Clarify expectations and revisit the product specifications.

By involving the entire team, you make hypothesis formulation a collective effort. This approach promotes collaboration and accountability, leading to better outcomes in the chaos engineering process.

BP7 — Control the blast radius of your experiments

When experimenting, it’s important to understand and minimize the potential impact caused by the experiment and any injected failures. Here are some key questions to consider:

How many customers will be affected?

Which functionality will be impaired?

Which locations will be impacted?

How will the experiment affect other teams or services?

To reduce risks, it’s recommended to start small with a new experiment and gradually expand its scope as more data is collected. This helps minimize the potential impact when exploring different failure scenarios until there’s enough evidence that the system can handle them correctly.

For example, begin with a single host, container, pod, or function. Once your service behaves as expected in this initial experiment, you can gradually increase the impact to include more components like a cluster, subnet or VPC, an availability zone, or even an entire region.
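
The "start small, then expand" approach can be expressed as a simple loop that stops at the first scope where the hypothesis fails. In this sketch, the scope names and the `fake_run` outcome are made up for illustration. In a real setup, `run_experiment` would trigger the fault injection and compare the results against the steady state.

```python
def expand_blast_radius(scopes, run_experiment):
    """Run the experiment on progressively larger scopes.

    Stops at the first scope where the hypothesis does not hold and
    returns the list of scopes that passed.
    """
    passed = []
    for scope in scopes:
        if not run_experiment(scope):
            break
        passed.append(scope)
    return passed


SCOPES = ["single host", "cluster", "availability zone", "region"]


def fake_run(scope):
    # Hypothetical outcome: pretend the zone-level run reveals a weakness.
    return scope != "availability zone"


print(expand_blast_radius(SCOPES, fake_run))
```

The point of the design is that a larger scope is never attempted until the smaller one has produced evidence that the system handles the failure.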

BP8 — Chaos engineering is a journey, and it doesn’t start in production

Chaos engineering in production offers undeniable benefits compared to non-production environments. Replicating production complexities like configuration, scale, and customer behavior is challenging outside of production. Non-production environments often lack crucial elements such as alarms, ticketing systems, and connections to third-party services. Real production traffic remains the most reliable way to capture the true behavior of the system.

However, I strongly advise against starting chaos engineering directly in production. Instead, begin in your local development environment, gradually progressing through beta, staging, and finally production. This gradual approach builds confidence and maturity, essential for successfully implementing chaos engineering or resilience practices.

Embarking on chaos engineering in production without adequate preparation can harm customer trust and brand credibility. Earn trust by showcasing expertise and understanding of the domain. Approach chaos engineering like a firefighter who learns extensively about fire before training with live flames. Earn credentials and demonstrate competence. Remember the fable of “The Tortoise and the Hare”: slow and steady wins the race. Conducting fault injection and chaos experiments in non-production environments provides valuable insights. For instance, stopping a database in your local environment using tools like Docker allows evaluation of the system’s response.
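
As a rough sketch of the local Docker example, the helper below stops a container, lets you observe the system, and always restarts the container afterwards. `docker stop` and `docker start` are standard Docker CLI commands, but the helper name, the `local-postgres` container name, and the observation callback are assumptions of mine. The usage part swaps the real command runner for a recorder so the sketch runs without Docker installed.

```python
import subprocess


def stop_then_restart(container, check_system, run=subprocess.run):
    """Stop a container, observe the system, then always restart it."""
    run(["docker", "stop", container], check=True)
    try:
        return check_system()
    finally:
        run(["docker", "start", container], check=True)


# Dry run: record the commands instead of executing them.
commands = []


def record_only(cmd, check):
    commands.append(cmd)


outcome = stop_then_restart(
    "local-postgres",               # hypothetical container name
    lambda: "degraded but serving",  # placeholder for a real health check
    run=record_only,
)
print(commands, outcome)
```

To run it for real, drop the `run=record_only` argument and replace the lambda with a function that calls your application and checks how it responds while the database is down.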

To provide a more accurate reflection of real-world conditions in non-production environments, consider integrating chaos experiments with load testing. Load testing lets you simulate system usage and behavior under various loads, helping to identify issues such as load balancing configurations, resource capacity limitations in the database, or bandwidth issues in the network. Without load testing, these issues may only surface in the production environment, impacting customer traffic and potentially causing large-scale outages lasting several hours.

Start with experiments in the development environment and gradually progress towards production. Learn from failures and replicate the same set of experiments across different environments, ideally up to production, until the hypothesis is confirmed. Replicating experiments helps verify that the system behaves consistently across all stages.

BP9 — Inject failure in a safe manner, particularly when done in production

To safely inject failures in a production environment, it is crucial to follow certain guidelines. Firstly, it’s important to isolate the experiment from customer traffic as much as possible. The goal of chaos engineering in production is to verify the infrastructure without impacting regular production flow. To achieve this, conducting experiments during off-peak hours or low-traffic periods is recommended.

Utilizing the canary release pattern is one of the safest approaches for injecting failure in an application. This pattern involves creating a new environment with the latest software version and gradually rolling out the change to a small subset of users, known as the experiment group. By employing the canary release pattern, you can isolate the chaos experiment from the primary production environment and have better control over the potential failure impact. Having a dedicated environment for the experiment facilitates handling logs and monitoring information. It allows for a gradual increase in the percentage of requests directed to the canary chaos experiment, with the ability to roll back if errors occur. This approach provides a near-instant rollback mechanism.

To further mitigate risks and limit customer impact, consider utilizing various routing or partitioning mechanisms for your experiment. These mechanisms can include differentiating traffic between internal teams and customers, paying customers and non-paying customers, geographic-based routing, feature flags, or random assignment.

However, it’s important to note that the canary release pattern primarily validates the resilience of the canary environment, which may not fully reflect the resilience of the production environment due to potential subtle differences.
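
One common way to route a small, stable subset of users to the canary is to hash a user identifier into a bucket. The sketch below is one possible approach under my own assumptions (the function name, the salt, and the use of SHA-256 are illustrative choices). It is not tied to any specific load balancer or feature-flag product.

```python
import hashlib


def in_experiment_group(user_id, percent, salt="chaos-canary"):
    """Deterministically assign `percent`% of users to the canary."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in [0, 100)
    return bucket < percent


users = [f"user-{n}" for n in range(1000)]
at_5 = {u for u in users if in_experiment_group(u, 5)}
at_20 = {u for u in users if in_experiment_group(u, 20)}

# Raising the percentage only adds users; nobody flips back and forth.
print(at_5 <= at_20)
```

Because the assignment is deterministic, a given user always lands in the same group, and setting the percentage to 0 acts as an immediate rollback.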

Additionally, establishing a Service Level Objective (SLO), error budget, and tracking the burn rate is crucial. These measures help determine whether it’s appropriate to conduct chaos experiments based on the available budget. An SLO sets the desired performance or reliability level, while the error budget defines the acceptable threshold of errors or disruptions within a specified time-frame.

By monitoring the burn rate of the error budget, you can ensure that chaos experiments are only conducted when the budget allows. This approach helps maintain a balance between valuable resilience experiments and system stability and reliability.
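
Here is a small worked example of the arithmetic behind that decision. It assumes a request-based SLO, defines the error budget as `1 - SLO`, and defines the burn rate as the recent error rate divided by that budget. The thresholds in `may_run_experiment` (at least half the budget left, burn rate no higher than 1.0) are arbitrary values I picked for illustration, so choose your own.

```python
def error_budget_remaining(slo, total_requests, failed_requests):
    """Fraction of the error budget still unspent in the SLO window."""
    if not 0 < slo < 1:
        raise ValueError("slo must be between 0 and 1, exclusive")
    allowed_failures = (1 - slo) * total_requests
    if allowed_failures == 0:
        return 0.0
    return 1 - failed_requests / allowed_failures


def burn_rate(slo, recent_total, recent_failed):
    """How fast the budget is being consumed; 1.0 means exactly on budget."""
    if not 0 < slo < 1:
        raise ValueError("slo must be between 0 and 1, exclusive")
    if recent_total == 0:
        return 0.0
    return (recent_failed / recent_total) / (1 - slo)


def may_run_experiment(remaining, rate, min_remaining=0.5, max_rate=1.0):
    """Gate experiments on budget left and on current consumption."""
    return remaining >= min_remaining and rate <= max_rate


# 99.9% SLO, 1,000,000 requests this window, 300 of them failed.
remaining = error_budget_remaining(0.999, 1_000_000, 300)
# In the last hour: 10,000 requests, 5 failed.
rate = burn_rate(0.999, 10_000, 5)

print(round(remaining, 3), round(rate, 3), may_run_experiment(remaining, rate))
```

With a 99.9% SLO, one million requests allow roughly 1,000 failures, so 300 failures leave about 70% of the budget. The recent error rate of 0.05% is about half the budgeted 0.1%, so under these assumed thresholds the experiment may proceed.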

BP10 — Prepare rollback and mitigation strategies

When conducting experiments, especially in production, it’s essential to have a plan to roll back any changes and mitigate their effects if things get out of control or unexpected consequences arise. Being prepared to quickly return to a stable state is crucial for maintaining system availability.

There’s always some uncertainty and risk involved, even with careful planning and execution. Issues may surface that affect system performance or stability. That’s why having a rollback plan is like a safety net, allowing teams to respond promptly and effectively.

The rollback plan should outline the necessary steps and procedures to undo any changes made during the experiment. It should include detailed documentation, scripts, or automation tools to streamline the rollback process. Allocating enough resources, such as skilled personnel or specialized tools, is also important to ensure a swift and efficient rollback.

With a well-defined rollback plan in place, teams can proactively address any negative effects caused by chaos experiments, reducing potential downtime or impact on customers.
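
When the fault injection is scripted, one simple way to make the rollback hard to forget is to tie it to the injection itself. The sketch below uses a Python context manager so the rollback runs whether the experiment completes, fails, or is aborted. The `fault_injection` name and the "latency" fault are hypothetical placeholders for whatever your tooling actually does.

```python
from contextlib import contextmanager


@contextmanager
def fault_injection(inject, rollback):
    """Inject a fault and guarantee the rollback runs afterwards."""
    inject()
    try:
        yield
    finally:
        rollback()  # runs on success, failure, or abort


events = []

try:
    with fault_injection(
        lambda: events.append("inject latency"),
        lambda: events.append("remove latency"),
    ):
        events.append("observe")
        raise RuntimeError("steady state violated, aborting")
except RuntimeError:
    events.append("aborted")

print(events)
```

Note that this only covers rollbacks the script itself can perform. If the process running the experiment crashes, you still need the documented manual procedure.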

BP11 — Introduce randomness

It’s important to introduce randomness in various aspects of the chaos experiments, like timing, experiment types, and parameters.

By randomizing the timing of execution, you can simulate different traffic patterns and load conditions, capturing the system’s behavior under varying workloads and unpredictable traffic. This helps uncover weaknesses that may only appear under specific conditions.

Introducing randomness in experiment types and parameters allows for a wide exploration of failure scenarios. By varying the types of failures and adjusting parameters like duration, intensity, or frequency, you can assess the system’s ability to handle different failure modes.

Embracing randomness mimics the dynamic nature of the production environment and helps uncover potential issues that more controlled experiments may miss. However, it’s crucial to strike a balance between randomness and control. Responsible chaos engineering focuses on controlled chaos, applying randomness within safe limits while effectively challenging the system.
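
The balance between randomness and control can be illustrated with a small sketch: the fault type and its duration are chosen at random, but only from a catalogue of faults with predefined safe limits. The catalogue entries, the limits, and the function name are invented for this example. The random generator is seeded so that a given run can be reproduced during the post-incident analysis.

```python
import random

# Hypothetical fault catalogue: each entry maps a fault type to the
# (min, max) duration in seconds considered safe for that fault.
SAFE_LIMITS = {
    "latency": (30, 120),
    "instance-termination": (60, 300),
    "cache-stop": (30, 180),
}


def pick_experiment(rng):
    """Pick a random fault and a random duration within its safe limits."""
    fault = rng.choice(sorted(SAFE_LIMITS))
    low, high = SAFE_LIMITS[fault]
    return {"fault": fault, "duration_s": rng.randint(low, high)}


rng = random.Random(42)  # seeded so a run can be reproduced later
plan = [pick_experiment(rng) for _ in range(3)]
print(plan)
```

Anything outside the catalogue can never be selected, which keeps the chaos controlled while still varying what the system is exposed to.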

BP12 — Conduct a post-incident analysis after each experiment

Performing a post-incident analysis, also known as a postmortem, after each experiment is crucial in chaos engineering. It helps us understand why failures occur and enables us to prevent similar ones in the future. Learning from mistakes, whether they’re related to technology, processes, or organization, is vital. It’s important to create an open and transparent environment focused on learning and improvement, rather than assigning blame.

Conducting a post-incident analysis is valuable for every experiment, regardless of the outcome. Even if an experiment is successful and doesn’t cause any issues, it’s still beneficial to evaluate how the system responded. Gathering concrete data to validate the experiment’s results is always recommended.

BP13 — Include Chaos Engineering as part of Operational Readiness Reviews (ORR)

The Operational Readiness Review (ORR) is a valuable process utilized at AWS to assess the operational state of a service. It involves a set of curated questions and best practices that capture lessons learned from previous incidents. By following these guidelines, teams can identify risks and implement best practices to prevent future incidents. ORRs are conducted throughout the entire lifecycle of a service, from inception to post-release operations.

Within the ORR process, one notable question is “What is your GameDay plan?”. This question prompts teams to consider dependencies and potential component failures, requiring them to create a comprehensive plan to validate the expected failure and recovery scenarios.

ORRs serve as an effective mechanism to promote the adoption of chaos engineering practices and ensure operational readiness. By following the ORR guidelines, teams can enhance their resilience and readiness in managing and recovering from incidents.

BP14 — People are key to success

People play a vital role in ensuring service availability. When an experiment fails and the service can’t recover on its own, having a well-trained and well-equipped team becomes crucial for timely recovery. It’s important to assess whether your team has the necessary tools for logging, monitoring, debugging, tracing, and manual service recovery. These tools help with incident response, finding the root cause, and resolving issues. Regular training, knowledge sharing, and learning from chaos engineering experiments contribute to their preparedness and confidence in handling outages. By acknowledging the importance of humans and providing the right resources, you can boost your team’s ability to handle service disruptions and maintain high availability.

BP15 — Fix known high risk issues before running another experiment

Before moving on to another experiment, make sure to address any known high-risk issues. It’s essential to prioritize fixing the findings from your chaos experiments rather than focusing solely on developing new features. Get support from upper management and stress the importance of dealing with current issues.

To emphasize the significance of addressing these risks, explain the potential business impact of inaction compared to the continuous emphasis on feature development. For instance, quantify the potential customer impact and the time frame for the identified issue to occur. This approach helps stakeholders understand the consequences of neglecting risk mitigation. Remind them of the cost of downtime discussed earlier.

BP16 — Experiment, often

The system under test is constantly evolving, with new features, changing environments, and evolving client usage. To keep up, we need a culture of continuous chaos experimentation. Regular experiments assess resilience, uncover weaknesses, and validate the system’s ability to handle different scenarios. They prepare teams for unexpected challenges and keep the system adaptable. Finding the right balance in experiment frequency enhances resilience while managing the budget. Embracing continuous chaos experimentation ensures resilience as the system evolves.

BP17 — Start today

While it’s crucial to follow best practices, it’s equally important to avoid getting stuck in analysis paralysis. The key is to prioritize starting with chaos engineering because those who take the initiative are the ones who can make progress. Waiting for everything to be perfect will only delay your journey.

By starting now, you can begin learning and gaining confidence without the need for perfection. It’s okay to hack your way through and perform tasks manually if needed, as long as you don’t impact production traffic. Starting now is about taking action, acquiring knowledge, and becoming more comfortable with chaos engineering. You don’t have to be an expert to learn the fundamental lessons.

When Amazon started this practice in 2001, Jesse Robbins, who was known as the Master of Disaster, began by unplugging servers in data centers. Over time, the program he called GameDay evolved and became more sophisticated. But the first step was taken more than 20 years ago!

The truth is we learn more from the process of pursuing excellence than from achieving it.

While being prepared is important, I can’t think of any better best practice for chaos engineering than the willingness to start.

That’s all, folks. I hope you have enjoyed this series and I wish you a successful chaos engineering journey. Don’t hesitate to give some feedback, share your opinion, or clap your hands. Thank you!

Adrian
